Tiny TinyML

An exploration of running Machine Learning algorithms on really, really small computers.

Click the image below to watch the Tiny TinyML full video!

What is Machine Learning?

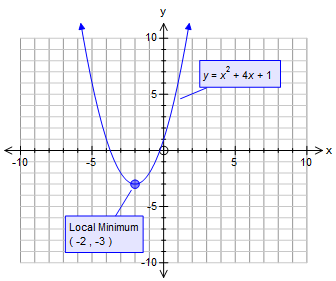

The term Machine Learning has certainly captured the imaginations of Computer Science majors and corporate boardrooms alike. It feels like magic - you take a handful of numbers, throw them at a neural network, and suddenly the computer can play chess or identify a flower or do your job better than you. However, it's not as mystical as it seems; the machine learning problem is something we've been doing since middle school Algebra - finding the maximum or minimum of a graph.

To learn how to solve a problem, the computer first needs to understand what the problem is. We define these problems in terms of Loss functions - a function that tests out our model and tells us how well (or how poorly) we are doing. For example, if we were trying to define a Loss function for the [Identifying Flowers] problem, we might choose to give our model one point when it correctly tells us what flower we're looking at and zero points otherwise. Then, we try to find some maximum (or minimum) on the Loss function by tweaking the parameters of our model - using the gradient of the Loss function to find the quickest way toward the optimal point we're trying to find.
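As a rough sketch of that last step, here is gradient descent on a toy one-parameter Loss function. The function `(w - 3)^2`, the learning rate, and the step count are all made up for illustration; a real model has many parameters, but the loop has the same shape.

```python
def loss(w):
    """Toy loss: smallest when w == 3 (an assumed example, not a real model)."""
    return (w - 3.0) ** 2


def grad(w):
    """Derivative of the toy loss with respect to w."""
    return 2.0 * (w - 3.0)


def gradient_descent(w0, lr=0.1, steps=100):
    """Repeatedly step against the gradient to walk downhill on the loss."""
    w = w0
    for _ in range(steps):
        w -= lr * grad(w)
    return w


if __name__ == "__main__":
    w_best = gradient_descent(w0=0.0)
    print(f"w = {w_best:.4f}, loss = {loss(w_best):.6f}")
```

Each step moves `w` a little in the direction that lowers the loss fastest, so the parameter settles near the minimum at 3.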

What About Neural Networks?

It's hard to blame people for liking Neural Networks, what with their strange hidden layers and esoteric activation functions. Largely treated like black boxes, they're remarkably flexible, able to take in almost any dataset and draw some conclusion from it. Many other machine learning algorithms either have stringent requirements for the shape of the dataset - i.e. being in a straight line, being separated by a straight line, etc. - or require us to know how to transform the data to fit the patterns that the algorithm finds acceptable. The former is rare in life, while the latter is hard and requires time and effort. The neural network suffers from neither of these concerns - which is a big part of why it shows up in so many machine learning applications today.

The neural network achieves such flexibility by being more modular than its linear friends. Think of a neural net like a LEGO Death Star. The individual LEGO blocks don't look like much. Some are long, some are short, some are flat, some are slanted. They're great at modelling rectangular things - the back of an eighteen wheeler, a brick, a train if you try hard enough - but they just don't have the complexity to model the organic, free-flowing shapes we see in the natural world. However, if we combine a bunch of LEGO blocks in the right way, after a lot of time and effort, we've built something a lot cooler. In the case of a neural net, the blocks are a bunch of tiny linear models that can be turned on or off at certain points. As you see in the picture below, as you add more and more linear models, the model we end up with - that the neural net learns - looks an awful lot like the ground truth.
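To make the LEGO idea concrete, here is a small sketch that stacks linear pieces, each switched on by a ReLU, to approximate the curve `y = x^2` on the interval from 0 to 1. The target curve, the hand-picked knots and slopes, and the function names are assumptions for illustration; a real network would learn these values instead.

```python
import numpy as np


def relu(z):
    """Pass positive values through unchanged and clamp negatives to zero."""
    return np.maximum(z, 0.0)


def piecewise_fit(x, knots, slopes):
    """Sum of ReLU 'hinges': each piece stays off until x passes its knot."""
    y = np.zeros_like(x)
    for knot, slope in zip(knots, slopes):
        y += slope * relu(x - knot)
    return y


def approximate_square(x, n_pieces):
    """Approximate x**2 on [0, 1] with n_pieces evenly spaced linear pieces."""
    knots = np.arange(n_pieces) / n_pieces
    # The first piece sets the starting slope; every later piece bends the line upward.
    slopes = np.full(n_pieces, 2.0 / n_pieces)
    slopes[0] = 1.0 / n_pieces
    return piecewise_fit(x, knots, slopes)


if __name__ == "__main__":
    x = np.linspace(0.0, 1.0, 201)
    for n in (2, 4, 8):
        err = np.max(np.abs(approximate_square(x, n) - x**2))
        print(f"{n} pieces: max error {err:.4f}")
```

The worst-case error shrinks as pieces are added, which is the same effect as snapping more blocks onto the model.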

That's Great And All, But What Does It Have To Do With Small Computers?

The Achilles' heel of the fanciest Machine Learning models is how expensive they are to train. We can teach a computer how to play poker - well enough to consistently beat the best human players out there, actually! - but the only people who have the compute needed to train these models are the research labs of the likes of Facebook and Google (or many of the research labs at Cornell). Even if the select few who have the compute power were kind enough to train models for the rest of us, they only have so many servers and so many hours in a day. A general purpose model trained by Google would be incredible at general purpose tasks, but most use cases for machine learning are not general purpose tasks. Most of the time, we have one specific thing we want our model to be able to do, whether we want it to recognize our voice when we tell Alexa to buy a new bottle of dish soap or we want it to recognize our faces whether we're wearing glasses or not. In these cases, the models would be running on very small computers. What they learn would also need to be confined to the specific device alone - nobody wants any Joe Schmoe to have access to [The Dummy's Guide to Recognizing [Your Name]'s Face From A Ring Camera]. Hence, the rise of TinyML.

The problem of TinyML is twofold - loading a model on a tiny computer and training a model on a tiny computer. Both require stringent memory management - given the small device size, we must make sure that every byte is used as efficiently as possible. The former task is (from a compute point of view) generally easier; we can make all the adjustments we need on a PC and give a completed model to the tiny computer. The latter task is more compute intensive - more intermediate math is required to calculate the loss and its gradients. This project aims to tackle the latter task, following a paper published by Hanif Heidari and Andrei A. Velichko.

How Are You Doing This?

The LogNNet architecture is a great lightweight build for any enterprising Machine Learning enthusiast looking to solve some simpler tasks - one of which is MNIST. Normally, training a proper object detection model for commercial use (think Face Recognition or Ring) would require a Convolutional Neural Network (CNN) - a much larger model that can adapt to changes in object positioning within an image - but MNIST, being a constrained vision problem, can be solved with a simpler network that can actually fit on our board.

The quick-n'-dirty rundown of the process is that we'll handle as much as we can on the computer, including preprocessing data and training the model, and load a trained model and a sample data point for interpretation onto the FRDM board.

Preprocessing the Data

The MNIST dataset comes from Yann LeCun's website. I don't know if he's the one that first uploaded it or something, but over the years his site has become the semi-official place to be if you want an authentic MNIST dataset. The files come packed in an obscure archive format. Luckily, the dataset is so popular with machine learning beginners that someone has graciously developed a library for loading these files into NumPy arrays - one that I gladly took advantage of.
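For the curious, the format is not too bad to read by hand. The sketch below is not the library I used; it is a minimal reader, assuming the files are gzip-compressed IDX files holding unsigned bytes, which is how MNIST is normally distributed. The function names are my own.

```python
import gzip
import struct

import numpy as np


def parse_idx(raw: bytes) -> np.ndarray:
    """Decode an IDX buffer of unsigned bytes into a NumPy array."""
    # Header: two zero bytes, a type code (0x08 = unsigned byte), then the dimension count.
    zero, dtype_code, ndim = struct.unpack(">HBB", raw[:4])
    if zero != 0 or dtype_code != 0x08:
        raise ValueError("not an unsigned-byte IDX buffer")
    # Each dimension size is stored as a big-endian 32-bit unsigned integer.
    shape = struct.unpack(">" + "I" * ndim, raw[4 : 4 + 4 * ndim])
    data = np.frombuffer(raw, dtype=np.uint8, offset=4 + 4 * ndim)
    return data.reshape(shape)


def load_idx_gz(path: str) -> np.ndarray:
    """Read a gzip-compressed IDX file from disk."""
    with gzip.open(path, "rb") as f:
        return parse_idx(f.read())
```

Calling `load_idx_gz` on the training image file should give an array of shape `(60000, 28, 28)`, and on the label file an array of shape `(60000,)`.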

T-Patterns?

The T-Pattern was published in an earlier paper by A. A. Velichko. When we think of flattening an array, our first instinct is to go row by row. However, in the case of the LogNNet, Velichko found that flattening the image in a different order was an easy way to boost a smaller model's performance by roughly 30% without consuming too much memory. I did not find an explanation from Velichko as to why this T-Pattern works, but I guess you can't argue with results.
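To show what "flattening differently" means, here are three ways to unroll the same image. None of these is claimed to be Velichko's actual T-Pattern; the column and snake orders are stand-ins I picked to illustrate that a flattening scheme is just a choice of visiting order.

```python
import numpy as np


def flatten_row_major(img):
    """The usual order: left to right along each row, top to bottom."""
    return img.reshape(-1)


def flatten_column_major(img):
    """Top to bottom along each column, left to right."""
    return img.T.reshape(-1)


def flatten_snake(img):
    """Row by row, but reversing every other row so the path never jumps."""
    rows = [row if i % 2 == 0 else row[::-1] for i, row in enumerate(img)]
    return np.concatenate(rows)


if __name__ == "__main__":
    demo = np.arange(6).reshape(2, 3)
    print(flatten_row_major(demo))
    print(flatten_column_major(demo))
    print(flatten_snake(demo))
```

All three produce the same 784 pixel values for an MNIST image; only the position each pixel lands in changes, which is what the network ends up being sensitive to.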

DataSet Class

After preprocessing the data, we're ready to load it into a model for training. However, to keep our data organized, it is useful to have a DataSet class to track which arrays do which things. The DataSet class I implemented kept track of the training and validation data and labels.
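A minimal sketch of what such a class might look like is below. The field names, the `from_arrays` helper, and the 20% validation split are assumptions for illustration rather than the exact class from the project.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class DataSet:
    """Keeps training and validation arrays together with their labels."""

    train_x: np.ndarray
    train_y: np.ndarray
    val_x: np.ndarray
    val_y: np.ndarray

    def __post_init__(self):
        # Catch mismatched data and labels early instead of during training.
        if len(self.train_x) != len(self.train_y):
            raise ValueError("training data and labels differ in length")
        if len(self.val_x) != len(self.val_y):
            raise ValueError("validation data and labels differ in length")

    @classmethod
    def from_arrays(cls, x, y, val_fraction=0.2):
        """Hold out the last val_fraction of the samples for validation."""
        n_val = int(round(len(x) * val_fraction))
        split = len(x) - n_val
        return cls(x[:split], y[:split], x[split:], y[split:])
```

Bundling the four arrays this way means the training code only ever passes around one object, and a length mismatch fails loudly at construction time.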

A Quick Aside on Testing

I was pretty thorough with the testing on these Python classes - I set up several PyTest cases, the whole shebang. Later model implementation would see more trial-and-error-type testing due to the math involved being troublesome for me to compute on my own.

Henon?

According to Wikipedia, Henon was a mathematician who studied the field of chaotic systems. I don't know much about them; from five minutes of googling, I've learned that chaotic systems are dynamical systems that can either diverge or converge depending on the way certain terms in their equations are scaled. I've always had a soft spot for those Computer Scientists who try to introduce chaos to a machine learning system. Though it sounds very much like the musings of an edgy teenager, there is a legitimate use to introducing chaos to models because models are often trained by greedy optimizers. They're like a stubborn friend who insists on paying for their groceries in cash - while they still get the job done, it takes an awful lot longer than it could, not to mention the inevitable awkward miscounting. A little chaos can encourage the model to explore beyond what immediately looks like the superior option. However, too much chaos can hurt your model's ability to learn anything at all. Figuring out just how much chaos you want in your model is an art in and of itself.

The Henon Initializer in the code follows the formula mentioned in the newer paper by Velichko and Heidari, which is itself based on Henon's work with chaotic systems. The formula depends on six different constants; if you had the time and the will to do so, you could run an optimization algorithm on these constants to produce a better model.
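To give a feel for how a chaotic map can fill a weight matrix, here is a sketch using the classic two-constant Henon map (`a = 1.4`, `b = 0.3`). This is an assumption on my part for illustration: it is not the six-constant formula from the Velichko and Heidari paper, and the function names are hypothetical.

```python
import numpy as np


def henon_sequence(n, a=1.4, b=0.3, x0=0.0, y0=0.0):
    """Return n successive x-values of the classic Henon map."""
    xs = np.empty(n)
    x, y = x0, y0
    for i in range(n):
        # Both coordinates are updated together from the previous state.
        x, y = 1.0 - a * x * x + y, b * x
        xs[i] = x
    return xs


def henon_weights(n_inputs=784, n_hidden=5, **kwargs):
    """Fill an (n_inputs, n_hidden) weight matrix from the chaotic sequence."""
    return henon_sequence(n_inputs * n_hidden, **kwargs).reshape(n_inputs, n_hidden)
```

The appeal for a tiny device is that the weights come from a handful of constants and a loop, so the same matrix can be regenerated on demand rather than stored.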

LogNNet

At long last, we arrive at the actual neural network in question. It's nothing too fancy - it takes in a 28 x 28 image (unrolled into a 1 x 784 tensor) and feeds the pixel values into a five-neuron hidden layer. These values are dotted with the weight matrix of these five neurons to produce a 1 x 5 tensor of data, which is in turn dotted with the second weight matrix to produce ten predictive values (one for each integer zero through nine). During training, a sigmoid function is applied to each of these ten values to draw attention to 'close' predictions - answers the model was not 'sure' about. However, when I built the forward-pass function for the FRDM board, I chose not to include the sigmoid layer due to the board's precision constraints.
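The shapes described above can be sketched in a few lines of NumPy. This follows the description literally (two dot products, an optional sigmoid at the end); the function names and the random placeholder weights are assumptions, and the real weights come from training.

```python
import numpy as np


def sigmoid(z):
    """Squash each score into the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


def forward(x, w1, w2, use_sigmoid=True):
    """Run one flattened image through the two weight matrices."""
    hidden = x @ w1       # (1, 784) @ (784, 5)  -> (1, 5)
    scores = hidden @ w2  # (1, 5)   @ (5, 10)   -> (1, 10)
    return sigmoid(scores) if use_sigmoid else scores


def predict(x, w1, w2, use_sigmoid=True):
    """Pick the digit with the largest output value."""
    return int(np.argmax(forward(x, w1, w2, use_sigmoid)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.random((1, 784))                     # placeholder image
    w1 = rng.normal(scale=0.01, size=(784, 5))   # placeholder weights
    w2 = rng.normal(size=(5, 10))
    print(predict(x, w1, w2, True), predict(x, w1, w2, False))
```

Because the sigmoid is strictly increasing, it does not change which of the ten values is largest, so leaving it out at inference time gives the same predicted digit as long as the scores are not large enough to saturate it.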

Normally, such a network for a ten-class classification task would feature at least ten neurons in its middle layer; however, the FRDM board only had 32kB of memory. To put the size in context, a single byte of data can store a signed integer in the range [-128, 127] or an unsigned integer in the range [0, 255]. Meanwhile, a single 32-bit float value takes up four bytes, and the first weight matrix of a model with ten neurons would have 7,840 of them (784 inputs x 10 neurons). That first weight matrix alone would take up over thirty kilobytes. Naturally, such a model would be unusable on the FRDM board, so we use TF Lite to shrink our model down to a manageable size.
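The arithmetic is easy to check. The sketch below assumes that "32kB" means 32 x 1024 bytes and counts only the first weight matrix, ignoring the second layer, the input image, and the program itself.

```python
N_INPUTS = 28 * 28          # one weight per pixel, per neuron
BOARD_BYTES = 32 * 1024     # assumed meaning of "32kB"


def weight_bytes(n_inputs, n_neurons, bytes_per_value):
    """Storage needed for an n_inputs x n_neurons weight matrix."""
    return n_inputs * n_neurons * bytes_per_value


if __name__ == "__main__":
    cases = [
        ("10 neurons, float32", weight_bytes(N_INPUTS, 10, 4)),
        ("10 neurons, int8", weight_bytes(N_INPUTS, 10, 1)),
        ("5 neurons, int8", weight_bytes(N_INPUTS, 5, 1)),
    ]
    for label, size in cases:
        print(f"{label}: {size} bytes ({size / BOARD_BYTES:.0%} of the board)")
```

The float32 matrix comes to 31,360 bytes, which leaves almost nothing for anything else on the board, while the same matrix in 8-bit integers needs a quarter of that.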

TF Lite

TensorFlow Lite is a library within the broader TensorFlow ecosystem built for the express purpose of making larger models accessible to smaller devices. We use it to convert our 32-bit float model into an 8-bit integer model. This new model is then stored in a .tflite file, which is where I ran into a roadblock. While TFLite (and TFLite Micro) comes with an interpreter for its .tflite files, said interpreter is not supported in C. To get around this roadblock, I wound up using a Neural Network visualization tool called Netron. Netron is a JavaScript application that reads all sorts of model files and visualizes their contents in a convenient flow chart. It can also extract the actual numerical values stored within those files and store them in .npy files. I then took these .npy files and plopped them into a scrap of Python code to format the numbers into a syntactically correct C declaration of an array, which I copy-pasted into a C file.
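That scrap of Python looked roughly like the sketch below. The function name, the `int8_t` element type, and the twelve-values-per-line layout are assumptions for illustration rather than the exact script.

```python
import numpy as np


def to_c_array(name, arr, c_type="int8_t", per_line=12):
    """Format a NumPy array as a flat C array declaration."""
    flat = np.asarray(arr).reshape(-1)
    lines = []
    for i in range(0, flat.size, per_line):
        chunk = ", ".join(str(int(v)) for v in flat[i : i + per_line])
        # A trailing comma after the last element is legal in a C initializer.
        lines.append("    " + chunk + ",")
    body = "\n".join(lines)
    return f"const {c_type} {name}[{flat.size}] = {{\n{body}\n}};\n"


if __name__ == "__main__":
    demo = np.array([[1, -2], [3, 4]], dtype=np.int8)
    print(to_c_array("w1", demo))
```

In practice the array would come from `np.load` on one of the exported .npy files, and the resulting text gets pasted into a C source file (with `stdint.h` included for `int8_t`). The matrix is flattened row by row, so the C code has to index it as `w[row * n_cols + col]`.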

FRDM At Last?

Finally, now that I had the model weights and the data, all I needed to do was write up a quick dot product scheme and plop all three onto the board. It was then that I realized just how problematic storage of a model could be. See, TFLite stores its weights as signed 8-bit values, which are much more storage efficient than the clunky full-size integers I was using. I did not know that TFLite was so considerate until I found that my petite nine kilobyte model had suddenly ballooned into a forty kilobyte monstrosity. Originally, the LogNNet I developed was supposed to have 25 neurons in its hidden layer, the smallest number of neurons Velichko and Heidari had bothered testing. Unfortunately, I found that a 25 x 784 matrix of signed integers was too much for the poor FRDM to handle, as the SDK refused to let me debug my code until the program no longer stuffed the board to the point of breaking (go figure). After several rounds of trial-and-error, I found that a five-neuron net (without any TFLite reductions!) would perform reasonably well on my computer, predicting the correct answer roughly 85% of the time.

Finally, after repeatedly googling how headers worked and making sure that the memory for each matrix was allocated properly, I was able to get the model to run on the FRDM board. I honestly didn't think that I would get to that point - having been driven half mad by storage issues - but I'm glad it all worked out in the end. Stressful though it was, I enjoyed the project and would like to spend more time on the subject in the future.