Objectives

- Test the localization in the simulation to verify it works correctly

- Run the update step of the Bayes filter using a real observation loop and real data

- Visualize and discuss the results

Testing the Localization

The simulation run below verifies that the provided Bayes filter code works as expected.

Implementing the Observation Loop

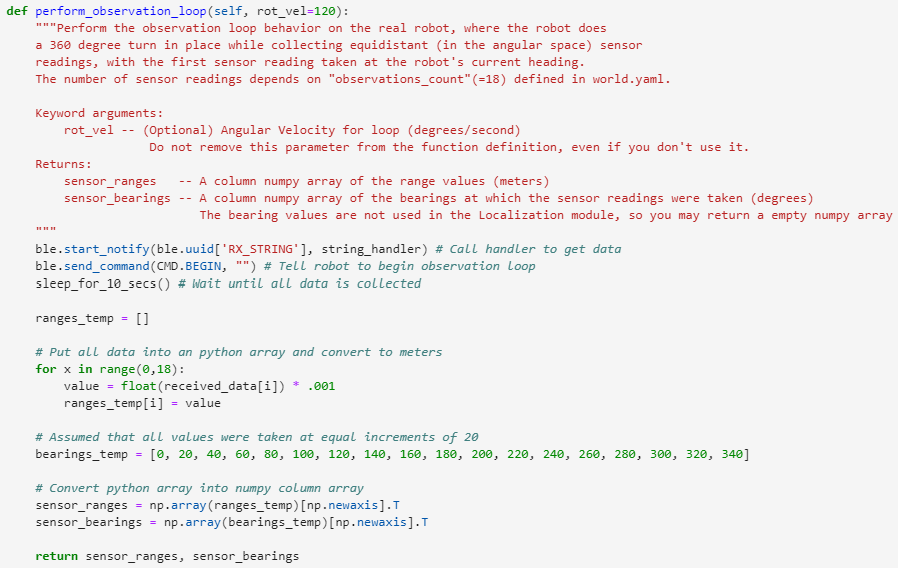

The purpose of the observation loop is to rotate the robot 360 degrees and gather 18 ToF readings, one roughly every 20 degrees. These readings are then run through the update step of the Bayes filter, which estimates where the robot is inside its environment.

To begin, I altered my notification handler function to add the received values to a global Python list called received_data. When the preform_observation_loop() function is called, it sets up the notification handler so it can receive incoming data. Then, the robot is notified that it can start its rotation. I estimated that it takes around ten seconds for the robot to finish its full cycle, so I added a wait so that all the incoming data can be properly appended to received_data. After that, I converted the data in received_data from millimeters to meters and, finally, converted the Python list into a NumPy column array called sensor_ranges. To reduce the complexity of sending data, I assumed that my robot turned in equal increments of 20 degrees. My implementation of the preform_observation_loop() function is shown below.
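The data-handling part of this loop can be sketched as follows. This is a minimal illustration under stated assumptions, not the exact implementation: the helper name to_sensor_arrays and the sensor_bearings array are hypothetical, and the BLE notification and waiting logic are left out so the snippet runs on its own.

```python
import numpy as np

NUM_READINGS = 18   # readings per full rotation (from the text)
STEP_DEG = 20       # assumed equal turn increment in degrees (from the text)

def to_sensor_arrays(received_data_mm):
    """Convert raw ToF readings in millimeters into NumPy column arrays.

    Hypothetical helper: returns ranges in meters and the assumed
    bearings in degrees, both with shape (18, 1).
    """
    if len(received_data_mm) != NUM_READINGS:
        raise ValueError(
            f"expected {NUM_READINGS} readings, got {len(received_data_mm)}"
        )
    # millimeters -> meters, reshaped into a column
    sensor_ranges = np.array(received_data_mm, dtype=float).reshape(-1, 1) / 1000.0
    # bearings are assumed, not measured: 0, 20, ..., 340 degrees
    sensor_bearings = (np.arange(NUM_READINGS, dtype=float) * STEP_DEG).reshape(-1, 1)
    return sensor_ranges, sensor_bearings
```

Checking the number of readings before converting makes a dropped or duplicated notification show up as an error rather than as a silently misaligned scan.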

Running a Real Update Step of Bayes Filter

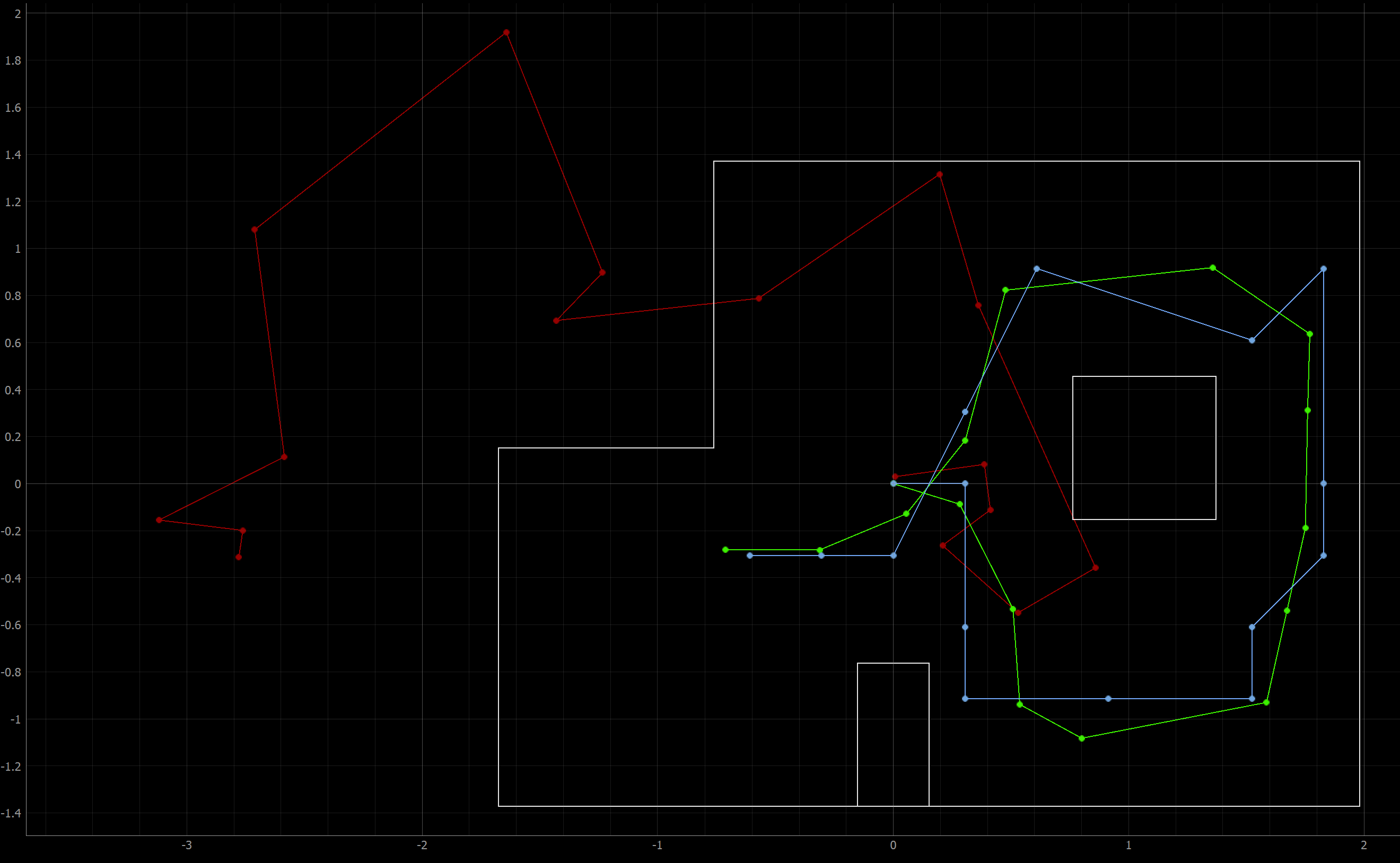

I ran my observation loop at the four marked spots in the lab: (-3, -2), (0, 3), (5, -3), and (5, 3). The plots and printed data below show where the Bayes filter placed the robot for each spot.

Actual: (-3, -2), Predicted: (1, 2)

[Image: plot of the Bayes filter prediction at (-3, -2)]

[Image: printed update-step data at (-3, -2)]

Actual: (0, 3), Predicted: (0, 2)

[Image: plot of the Bayes filter prediction at (0, 3)]

[Image: printed update-step data at (0, 3)]

Actual: (5, -3), Predicted: (5, -2)

[Image: plot of the Bayes filter prediction at (5, -3)]

[Image: printed update-step data at (5, -3)]

Actual: (5, 3), Predicted: (4, 3)

[Image: plot of the Bayes filter prediction at (5, 3)]

[Image: printed update-step data at (5, 3)]
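To put a number on how far each prediction landed from the ground truth, the Euclidean distance between the actual and predicted points can be computed directly from the four pairs listed above. This is a small sketch; the names results and position_error are illustrative, and the distances are in the same units as the map coordinates.

```python
import math

# (actual, predicted) pairs copied from the results above
results = [
    ((-3, -2), (1, 2)),
    ((0, 3), (0, 2)),
    ((5, -3), (5, -2)),
    ((5, 3), (4, 3)),
]

def position_error(actual, predicted):
    """Euclidean distance between two (x, y) points, in map units."""
    return math.hypot(predicted[0] - actual[0], predicted[1] - actual[1])

errors = [position_error(a, p) for a, p in results]
for (actual, predicted), err in zip(results, errors):
    print(f"actual={actual} predicted={predicted} error={err:.2f}")
# prints errors of 5.66, 1.00, 1.00 and 1.00
```

Three of the four spots are off by a single unit along one axis, while (-3, -2) is off by about 5.66 units.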

Video of Observation Loop

Analysis

I am very surprised that the Bayes filter worked this well, although I did repeat the update step at each spot until I got sufficient predictions. Given that the last lab did not produce the best readings, I would have expected this lab to go the same way, even after fixing my turning. Even so, there is still some variation: the predicted spots do not exactly match the ground truth.

I had to repeat the update step several times at the points (5, 3) and (5, -3) before I got good results. I believe this is because the walls were close and have a lot of discontinuities. My data could be somewhat inaccurate because I am using the long-distance sensing mode on the ToF sensors, and the discontinuities in the walls could sometimes be missed.

The point (-3, -2) gave me the most trouble. I could never get an accurate result there, so I had to settle for the poor estimate above. I think the results were poor because there are not many distinct landmarks around (-3, -2), so it can easily be confused with another spot on the map. The predicted point (1, 2) has very similar surroundings to (-3, -2), which is why I think the two are easily confused.

It is also important to note that my rotation was still not perfect. The turning was still very inconsistent, most likely because of varying friction and a draining battery. For these reasons, I am concerned about how the robot would actually perform when trying to move to predetermined points on the map, because it can very easily make a small or large mistake and not recover.
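The confusion between similar-looking spots follows from how an update step scores each candidate pose. The sketch below assumes a Gaussian sensor model with independent readings, which may differ from the provided lab code. The function names, the sigma value, the pose labels, and all range values are made up for illustration and are not measurements from the lab.

```python
import math

def gaussian(x, mu, sigma):
    """Gaussian probability density of x given mean mu and std sigma."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))

def update_step(prior, expected, observed, sigma=0.1):
    """Bayes filter update over a small set of candidate poses.

    prior:    {pose: prior probability}
    expected: {pose: ranges the map predicts from that pose, in meters}
    observed: measured ranges in the same order, in meters
    sigma:    assumed sensor noise standard deviation, in meters
    """
    posterior = {}
    for pose, p in prior.items():
        likelihood = 1.0
        for z, z_hat in zip(observed, expected[pose]):
            likelihood *= gaussian(z, z_hat, sigma)
        posterior[pose] = likelihood * p
    total = sum(posterior.values())
    if total == 0.0:
        raise ValueError("all poses have zero probability")
    return {pose: v / total for pose, v in posterior.items()}

# Made-up example: poses A and B see almost the same walls, C does not.
prior = {"A": 1 / 3, "B": 1 / 3, "C": 1 / 3}
expected = {
    "A": [1.00, 2.00, 1.50, 0.80],
    "B": [1.05, 2.00, 1.45, 0.80],
    "C": [0.40, 0.60, 2.50, 1.90],
}
observed = [1.02, 1.98, 1.48, 0.82]
posterior = update_step(prior, expected, observed)
```

In this toy case the belief ends up split almost evenly between A and B while C is effectively ruled out, so a few centimeters of sensor error or a slightly uneven turn could tip the estimate to the wrong one of the two similar poses.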