Lab 4/Milestone 4

Objectives:

- Develop an efficient data scheme to store all maze information on an Arduino

- Send accurate maze information wirelessly between Arduinos

- Update the display accurately from the base station

- Robotic Integration, consisting of:

  - Working Override button

  - Wall sensing operational

  - Your robot can sense other robots and avoid them

  - Navigation algorithm implemented

  - Green LED on robot to signal end of mapping/exploring

Radio Communication:

Our communication scheme between the base station Arduino and the FPGA uses 12 pins, one per bit of information: four for each of the x- and y-coordinates, and one for each cardinal direction to flag a wall. The Arduino on the robot must therefore send coordinate and wall information to the base station in a form that can be readily translated onto these pins. It does so as follows:

When the robot arrives at an unexplored node during DFS, it sends an array of two bytes to the base station. One byte contains coordinate information (upper nibble for the y-coordinate, lower nibble for the x-coordinate), and the other contains the wall flags (one bit of the lower nibble for each cardinal direction). The key is that the robot sends new information about each tile of the maze as soon as it reaches that tile.
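The two-byte layout can be sketched as follows. This is a minimal illustration, not our actual robot code: the helper names and the assignment of wall bits to directions (north = bit 0 through west = bit 3) are assumptions, since the layout above only fixes which nibble each field occupies.

```cpp
#include <cstdint>

// Hypothetical wall-bit assignment for the lower nibble of the wall byte
// (an assumption; only "one bit per cardinal direction" is specified).
const uint8_t WALL_NORTH = 1 << 0;
const uint8_t WALL_EAST  = 1 << 1;
const uint8_t WALL_SOUTH = 1 << 2;
const uint8_t WALL_WEST  = 1 << 3;

// Pack coordinates (each 0..8, so 4 bits suffice) into one byte:
// y in the upper nibble, x in the lower nibble.
uint8_t encodeCoordinates(uint8_t x, uint8_t y) {
  return static_cast<uint8_t>(((y & 0x0F) << 4) | (x & 0x0F));
}

// Pack the four cardinal wall flags into the lower nibble of one byte.
uint8_t encodeWalls(bool north, bool east, bool south, bool west) {
  uint8_t walls = 0;
  if (north) walls |= WALL_NORTH;
  if (east)  walls |= WALL_EAST;
  if (south) walls |= WALL_SOUTH;
  if (west)  walls |= WALL_WEST;
  return walls;
}
```

For example, the tile at x = 3, y = 5 encodes to 0x53, and walls on the north and south sides encode to 0x05 under this bit assignment.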

The robot keeps track of its location as a pair of integers from 0 to 8 as it moves, so when it reaches an unvisited intersection we call a helper function that encodes the coordinates into one byte. As for the walls, the robot's sensors report walls to its front, left, and right, so these relative readings must be converted into cardinal directions. A second helper function does this using the robot's tracked orientation: it updates a set of global variables for the cardinal-direction walls from the relative wall data.
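A minimal sketch of the relative-to-cardinal conversion, assuming headings are numbered clockwise (north = 0, east = 1, south = 2, west = 3) so that "right" is one step clockwise and "left" is one step counterclockwise. The `Heading` enum, the `CardinalWalls` struct (a stand-in for our global variables), and `toCardinal` are hypothetical names.

```cpp
#include <cstdint>

// Heading encoding (an assumption): clockwise order, so a right turn adds 1 mod 4.
enum Heading : uint8_t { NORTH = 0, EAST = 1, SOUTH = 2, WEST = 3 };

// Wall flags indexed by Heading (hypothetical stand-in for global variables).
struct CardinalWalls {
  bool wall[4] = {false, false, false, false};
};

// Rotate the relative front/left/right readings into cardinal directions.
// The rear side is not sensed; it is reported as open here because the robot
// just drove through it (an assumption that does not hold at the start node).
CardinalWalls toCardinal(Heading heading, bool front, bool left, bool right) {
  CardinalWalls w;
  w.wall[heading] = front;
  w.wall[(heading + 1) % 4] = right;  // one step clockwise
  w.wall[(heading + 3) % 4] = left;   // one step counterclockwise
  return w;
}
```

With this numbering, a robot facing east that sees walls ahead and to its right records walls on the east and south sides.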

We used the “Getting Started” sketch in the RF24 library as a reference for initializing the necessary variables and properly calling radio.read() and radio.write(). In summary, our transmit procedure is: encode the coordinate data, convert the wall data to cardinal directions, encode the wall data, concatenate the two bytes into an array, and send the byte array.
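The final two steps of that procedure (concatenate, then send) might look roughly like the sketch below. To keep it runnable without radio hardware, the transmit step is passed in as a function pointer; on the robot it would wrap the RF24 call `radio.write(buf, len)`. `SendFn` and `sendNodeUpdate` are hypothetical names, and the wall nibble is assumed to have already been converted to cardinal directions and encoded.

```cpp
#include <cstddef>
#include <cstdint>

// Transmit step, injected so packet assembly can be tested off-robot.
// On the Arduino this would wrap radio.write(buf, len) from RF24 (assumption).
typedef bool (*SendFn)(const uint8_t* buf, size_t len);

// Hypothetical helper: assemble and send one node update.
// packet[0] = coordinates (y in upper nibble, x in lower nibble)
// packet[1] = wall flags (lower nibble, one bit per cardinal direction)
bool sendNodeUpdate(uint8_t x, uint8_t y, uint8_t wallNibble, SendFn send) {
  uint8_t packet[2];
  packet[0] = static_cast<uint8_t>(((y & 0x0F) << 4) | (x & 0x0F));
  packet[1] = static_cast<uint8_t>(wallNibble & 0x0F);
  return send(packet, sizeof(packet));  // true if the transmit step reports success
}
```

Injecting the sender also matches how we tested: hard-coded packets can be pushed through the same path before the radio is attached.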

When the base station Arduino receives the data, it sets its output pins to communicate with the FPGA as we described in Lab 3. It also sends an acknowledgment back to the robot so that the robot knows the transmission succeeded. If the robot does not receive this acknowledgment within 250 ms, it times out and resumes its exploration routine.
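Both sides of this exchange can be sketched in a few lines. `decodePacket` unpacks the two bytes into the 12 logic levels the base station drives toward the FPGA (on the Arduino each level would go to `digitalWrite()` on its pin; the pin numbers are not shown). `ackTimedOut` is the robot-side 250 ms check, written with unsigned subtraction so it stays correct when a `millis()`-style counter wraps. The struct, function names, and wall-bit order are assumptions for illustration.

```cpp
#include <cstdint>

// The 12 parallel signals sent to the FPGA.
struct FpgaPins {
  bool x[4];     // x-coordinate bits, x[0] = LSB
  bool y[4];     // y-coordinate bits, y[0] = LSB
  bool wall[4];  // one flag per cardinal direction (bit order is an assumption)
};

// Base station: unpack the received bytes into one logic level per output pin.
FpgaPins decodePacket(const uint8_t packet[2]) {
  FpgaPins pins;
  for (int i = 0; i < 4; ++i) {
    pins.x[i] = (packet[0] >> i) & 1;        // lower nibble of coordinate byte
    pins.y[i] = (packet[0] >> (4 + i)) & 1;  // upper nibble of coordinate byte
    pins.wall[i] = (packet[1] >> i) & 1;     // lower nibble of wall byte
  }
  return pins;
}

// Robot: has the acknowledgment window expired? Unsigned arithmetic keeps the
// elapsed time correct across counter wraparound.
bool ackTimedOut(uint32_t startMs, uint32_t nowMs, uint32_t timeoutMs = 250) {
  return (nowMs - startMs) > timeoutMs;
}
```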

For testing, we hard-coded a sequence of data packets to send over the radio before integrating the radio code with the rest of the robot.

After successful integration, the result is as shown:

Robot Integration:

Override Button:

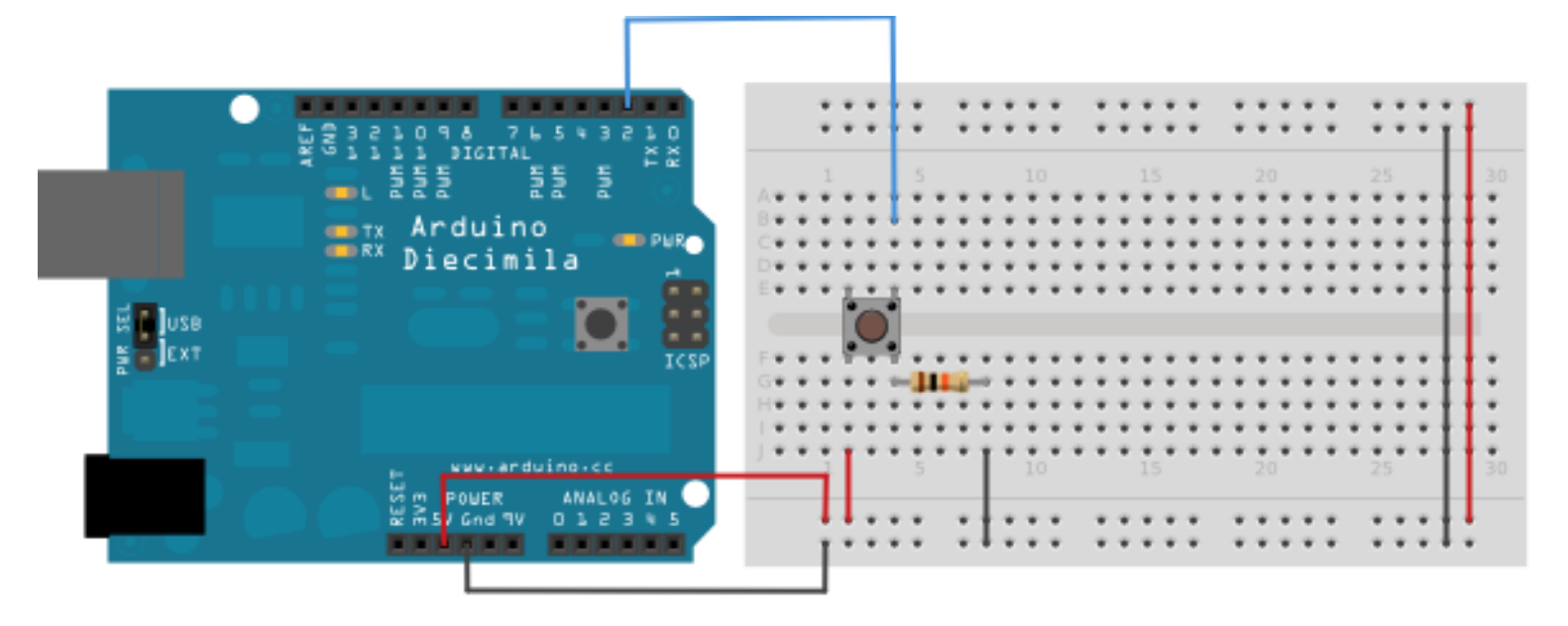

In case the robot fails to detect the 950 Hz starting tone, we added a manual override push-button connected to digital pin 7 through a 10 kΩ resistor. The pin is pulled low until the button is pressed and is read at the start of the loop. After the robot is reset, the DFS algorithm starts as soon as either the tone is detected or the button pin reads high.
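The start condition can be sketched as a small latch. We assume here that once exploration starts it should keep running even after the button is released or the tone stops; `StartGate` is a hypothetical name, and on the Arduino `buttonHigh` would come from `digitalRead(7) == HIGH`.

```cpp
// Hypothetical start latch: exploration begins once either the 950 Hz tone
// detector or the override button on pin 7 reports a start, and it stays
// started even after both inputs return low (an assumption).
struct StartGate {
  bool started = false;

  // Call once per loop() iteration with the latest readings.
  bool update(bool toneDetected, bool buttonHigh) {
    if (toneDetected || buttonHigh) started = true;
    return started;
  }
};
```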

Wall Sensing Operational

Our wall sensing code remains the same as in Milestone 2. The robot polls each of its three wall sensors for an analog reading every time it arrives at an intersection, and the navigation algorithm uses this information to decide which direction to explore next. While recalibrating the wall sensors for the competition, we found that the front sensor was malfunctioning, with its readings fluctuating wildly, so we replaced it with a long-range wall sensor.
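A per-sensor wall check might be sketched as below, assuming the wall sensors are analog IR distance sensors whose output rises as a wall gets closer, so a reading above a calibrated threshold counts as a wall. Averaging a few samples is a hypothetical way to damp noise, not something our Milestone 2 code is stated to do, and the thresholds are placeholders; the long-range front sensor would need its own value.

```cpp
#include <cstddef>

// Hypothetical wall test for one analog IR distance sensor: average a few
// readings, then compare against a calibrated threshold (placeholder value).
bool wallDetected(const int* samples, size_t n, int threshold) {
  if (n == 0) return false;  // no data, report no wall
  long sum = 0;
  for (size_t i = 0; i < n; ++i) sum += samples[i];
  return sum / static_cast<long>(n) > threshold;
}
```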

Robot Detection and Avoidance

Our robot detection hardware setup remains the same as in Milestone 3. We use a bipolar junction transistor (BJT) to build an amplifier circuit for the 940 nm IR phototransistor, allowing us to use the signal as a digital input. We mount the phototransistor five inches above the floor, facing forward and parallel to the floor.

When the phototransistor detects an IR LED directly in front of it, the input signal to the Arduino goes high. We continuously poll this signal while the robot follows the line between intersections. If the signal goes high at any point during this time, the robot takes evasive action when it arrives at the next intersection. Once a detection has been handled, we clear the detection flag and continue normally with the DFS algorithm.
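The detection flag described above behaves like a latch: any high sample during line following sets it, and it is only cleared after the robot has handled the detection at the intersection. `RobotDetector` and its methods are hypothetical names for this sketch.

```cpp
// Sketch of the detection latch: sampled continuously while line following,
// checked on arrival at an intersection, cleared after evasive action.
struct RobotDetector {
  bool seen = false;

  // Called every iteration of the line-following loop.
  void sample(bool irInputHigh) {
    if (irInputHigh) seen = true;  // a single high reading is remembered
  }

  // Checked on arrival at an intersection.
  bool pending() const { return seen; }

  // Called once the detection has been handled.
  void clear() { seen = false; }
};
```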

When a robot is detected in front, we first check whether there is an unexplored opening on the left or right. If so, we simply turn and continue normally with the navigation algorithm. If neither direction is open, the robot turns around and retraces its steps until it finds a parent node with unexplored child nodes. However, because the robot has not finished exploring the path it abandoned, it must be able to find its way back. We leave a “breadcrumb trail” during backtracking by marking each backtracked node as unvisited, which forces the robot to explore that path again later. If our robot detects another robot while it is backtracking, it must wait for the other robot to move out of the way. To do this, the robot performs a simple maneuver: it turns around, travels back one step, and then turns around again to return to where it last saw the other robot. It repeats this maneuver until the other robot is no longer detected.
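The avoidance decision and the breadcrumb step can be sketched as follows. Checking left before right and indexing the visited array as `visited[y][x]` are assumptions; the enum and function names are hypothetical.

```cpp
// Hypothetical actions for a robot detected ahead.
enum class Avoid { TurnLeft, TurnRight, TurnAroundAndBacktrack, WaitManeuver };

// leftOpen/rightOpen: no wall on that side; leftUnvisited/rightUnvisited:
// the neighboring node has not been explored. Left-before-right is an assumption.
Avoid chooseAvoidance(bool backtracking,
                      bool leftOpen, bool leftUnvisited,
                      bool rightOpen, bool rightUnvisited) {
  if (backtracking) return Avoid::WaitManeuver;  // turn around, step back, return
  if (leftOpen && leftUnvisited) return Avoid::TurnLeft;
  if (rightOpen && rightUnvisited) return Avoid::TurnRight;
  return Avoid::TurnAroundAndBacktrack;
}

// Breadcrumb: each node retraced while backing away is marked unvisited,
// so DFS will return later to finish the abandoned path.
void leaveBreadcrumb(bool visited[9][9], int x, int y) {
  visited[y][x] = false;
}
```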

Navigation Algorithm

The navigation algorithm remains the same as in Milestone 3. In summary, we implemented a depth-first search (DFS) algorithm that recursively explores each of the intersections in the maze. Using a 9x9 boolean array to keep track of whether or not an intersection has already been visited, the algorithm visits as many intersections along a path as it can before it must turn around and return to a previous node. We prioritize the forward direction because it does not require additional time to make a turn. When the robot has explored all unvisited nodes, it will return to its start node and finish mapping the maze.
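A recursive DFS with forward priority might look like the sketch below. It simulates the maze with a `canMove` callback instead of real sensors, and the order after forward (right, then left, then back) is an assumption, as are the coordinate convention (y grows toward the south) and all names. Returning from a recursive call corresponds to the robot driving back to the parent node, which is how it eventually ends at the start node.

```cpp
#include <functional>
#include <vector>

const int N = 9;  // 9x9 grid of intersections

// Headings in clockwise order: 0 = North, 1 = East, 2 = South, 3 = West.
const int DX[4] = {0, 1, 0, -1};
const int DY[4] = {-1, 0, 1, 0};  // assumes y grows toward the south

// canMove(x, y, dir): true if there is no wall on side `dir` of (x, y).
using CanMove = std::function<bool(int, int, int)>;

// Recursive DFS preferring forward, then right, left, and back (relative turns).
// `order` records newly visited intersections as y * N + x.
void dfs(int x, int y, int heading, bool visited[N][N],
         const CanMove& canMove, std::vector<int>& order) {
  visited[y][x] = true;
  order.push_back(y * N + x);
  const int tryOrder[4] = {0, 1, 3, 2};  // forward, right, left, back
  for (int turn : tryOrder) {
    int dir = (heading + turn) % 4;
    int nx = x + DX[dir], ny = y + DY[dir];
    if (nx < 0 || ny < 0 || nx >= N || ny >= N) continue;  // outside the maze
    if (!canMove(x, y, dir) || visited[ny][nx]) continue;  // wall or explored
    dfs(nx, ny, dir, visited, canMove, order);  // returning = driving back
  }
}
```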

Green LED

We added a green LED on digital pin 2 to signal the end of mapping. There are two maze scenarios to account for: a maze with at least one inaccessible intersection (completely walled off), or a maze that can be fully explored. In the latter case it is easy to detect when the robot has finished: every time the robot arrives at an intersection, we check the boolean array of visited nodes to see whether every node has been visited, and a function at the beginning of the DFS function performs exactly this check. If there are walled-off areas, however, the enclosed intersections can never be reached, so the visited array never fills up and this check cannot tell us when the robot is done. Computing whether closed-off areas exist is also difficult, since they can be of any shape and size. Therefore, in this case we simply turn on the green LED once the robot has returned to its starting node and there are no more unexplored child nodes.
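The two completion conditions can be combined into one check, sketched below with hypothetical function names and the visited array indexed as `visited[y][x]`. On the robot, a true result would drive the green LED on pin 2 high.

```cpp
const int N = 9;

// Case 1: every intersection is reachable, so the visited array eventually fills.
bool allVisited(bool visited[N][N]) {
  for (int y = 0; y < N; ++y)
    for (int x = 0; x < N; ++x)
      if (!visited[y][x]) return false;
  return true;
}

// Case 2 fallback: walled-off intersections are never visited, so mapping is
// also done once DFS is back at the start node with no unexplored child left.
bool mappingDone(bool visited[N][N], bool atStart, bool hasUnexploredChild) {
  return allVisited(visited) || (atStart && !hasUnexploredChild);
}
```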