In Lab 3, we were tasked with implementing and testing passive and active filters using the hardware we had on hand, combined with a computer and MATLAB, and comparing the measured responses to the theoretical predictions. We also implemented and tested a bandpass filter that will be used on our robots in Lab 4.

My Procedure for this Lab

- Sections 0-3 (04/06): Answer Questions on Canvas, Use LTSpice to Draw Low Pass and High Pass Filters, Build the Microphone Circuit, and Code the Arduino and MATLAB to Characterize Circuits

- Sections 4-6 (04/13): Improve the Microphone Circuit, Test my Low Pass and High Pass Circuits, and Create a Bandpass Filter

- Sections 7-9 (04/20): Set Up the FFT on the Arduino, Complete the Lab, and Submit Deliverables

Answering Questions on Canvas

| Question | My Answer |
| --- | --- |
| Consider using TCA in a simple application of a periodic interrupt, as discussed in class. In this problem, you want to toggle the onboard LED every 135 ms (i.e., the LED is ON for 135 ms, then OFF for 135 ms, etc.). What is the number of clock ticks between the moment when the timer starts and the moment when the interrupt is triggered after 135 ms assuming that you use a TCA prescaler of 16 and your Nano's operating frequency is 16 MHz (no prescaler is used for the main clock)? | You cannot have this period of 135 ms with the given parameters. |
| Given that your Nano's operating frequency is 16 MHz (no prescaler is used for the main clock), what is (approximately) the longest possible achievable interrupt period for TCA? | 4.096 millisecs |
| You have an application that involves using TCB (instance 0) in the Input Capture on Event mode. You want to use the Noise Cancellation feature of TCB, and you want the event edge detector to trigger the capture on falling edges. Which of the following (choose all that apply) can be used to set the appropriate register bits to set the three requirements above (Input Capture on Event mode, Noise Cancellation feature, trigger the capture on falling edges)? | `TCB0.CTRLB \|= TCB_CNTMODE_CAPT_gc;`<br>`TCB0.EVCTRL \|= TCB_CAPTEI_bm;`<br>`TCB0.EVCTRL \|= TCB_EDGE_bm;`<br>`TCB0.EVCTRL \|= TCB_FILTER_bm;`<br><br>`TCB0.CTRLB \|= TCB_CNTMODE_CAPT_gc;`<br>`TCB0.EVCTRL = TCB_CAPTEI_bm \| TCB_EDGE_bm \| TCB_FILTER_bm;` |
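The timer arithmetic behind the first two answers can be checked with a short sketch. It assumes TCA is used as a single 16-bit counter, so one timer period spans at most 65,536 ticks. The function names are hypothetical helpers written for this illustration, not part of any Arduino or AVR library.

```c
#include <assert.h>
#include <stdint.h>

/* Assumption: TCA counts with 16 bits, so one period spans at most 65536 ticks. */
#define TCA_MAX_TICKS 65536UL

/* Number of timer ticks needed for a period given in microseconds.
   The timer tick rate is f_clk_hz / prescaler. */
uint32_t tca_ticks_for_period_us(uint32_t period_us, uint32_t f_clk_hz,
                                 uint32_t prescaler)
{
    assert(prescaler > 0);
    uint64_t ticks = (uint64_t)period_us * f_clk_hz /
                     ((uint64_t)prescaler * 1000000ULL);
    return (uint32_t)ticks;
}

/* Returns 1 if the requested period fits in one 16-bit timer period, else 0. */
int tca_period_fits(uint32_t period_us, uint32_t f_clk_hz, uint32_t prescaler)
{
    return tca_ticks_for_period_us(period_us, f_clk_hz, prescaler) <= TCA_MAX_TICKS;
}

/* Longest single timer period, in microseconds, for a given prescaler. */
uint32_t tca_max_period_us(uint32_t f_clk_hz, uint32_t prescaler)
{
    assert(f_clk_hz > 0);
    return (uint32_t)(TCA_MAX_TICKS * (uint64_t)prescaler * 1000000ULL / f_clk_hz);
}
```

With a 16 MHz clock and a prescaler of 16, each tick lasts 1 µs, so 135 ms would need 135,000 ticks, which does not fit in 16 bits. With no TCA prescaler, the same arithmetic gives 65,536 / 16 MHz = 4.096 ms, and each larger prescaler value scales that limit proportionally.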

Final Results, Summary & Takeaways, Next Steps

Final Results:

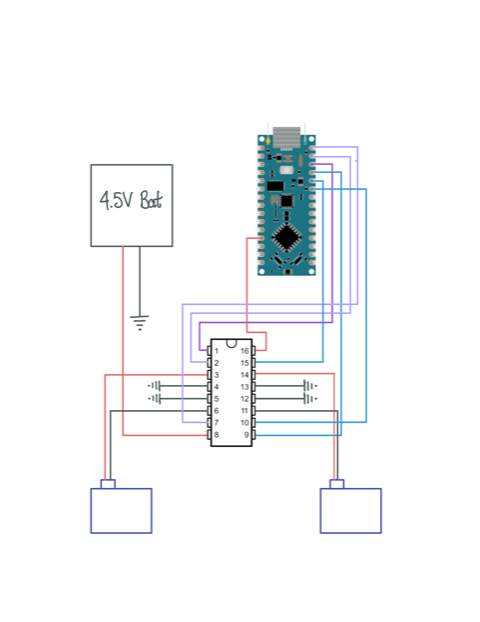

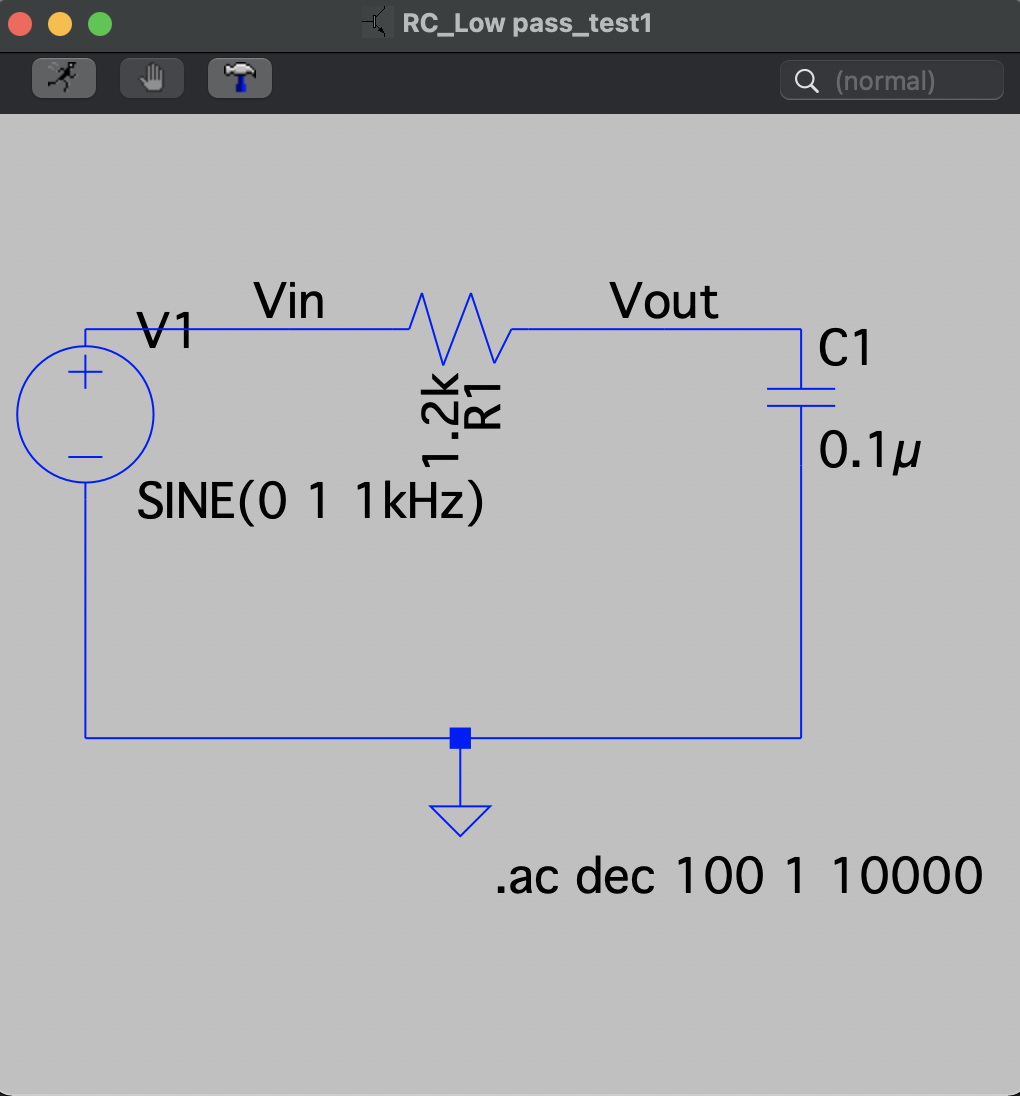

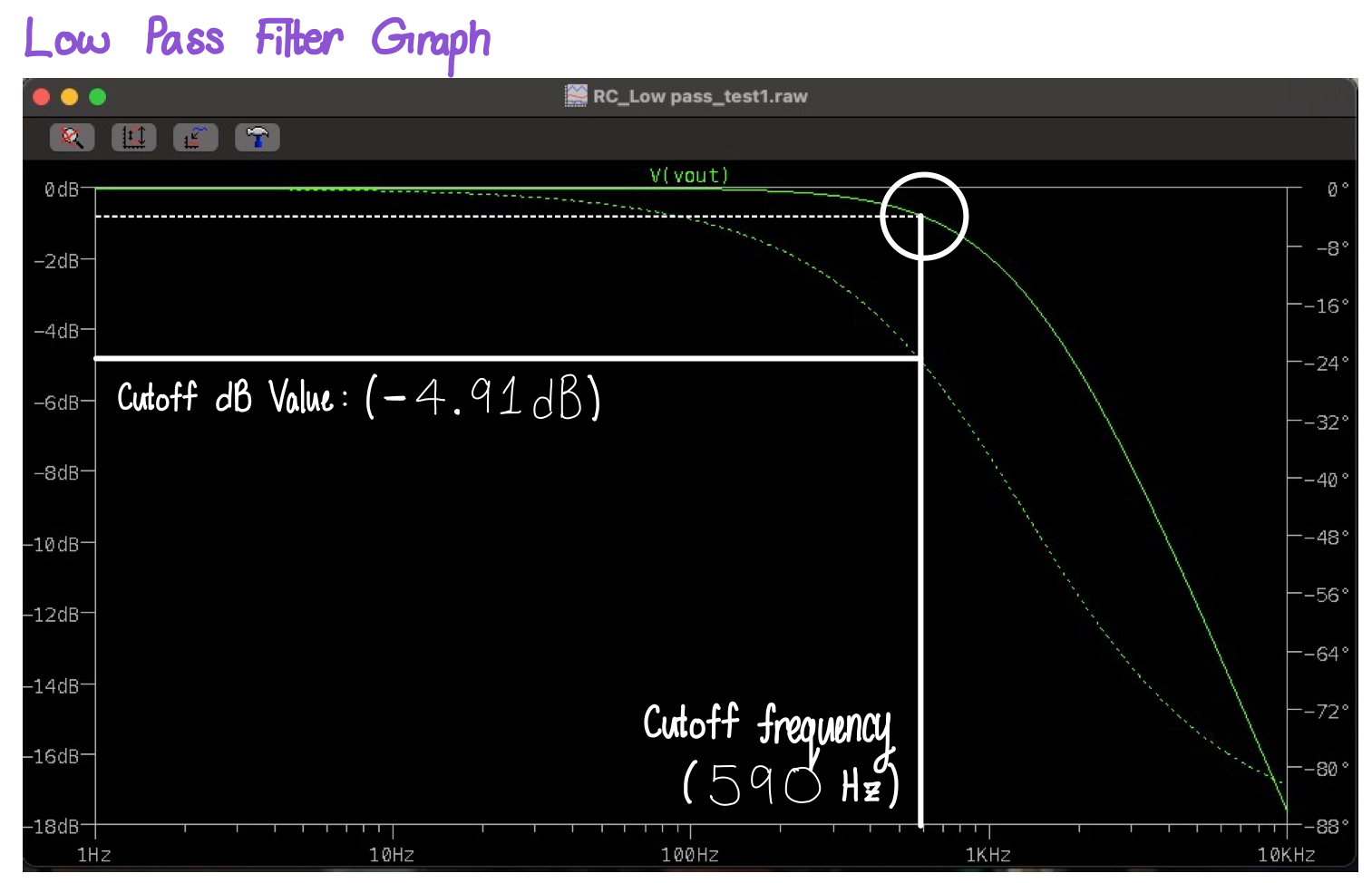

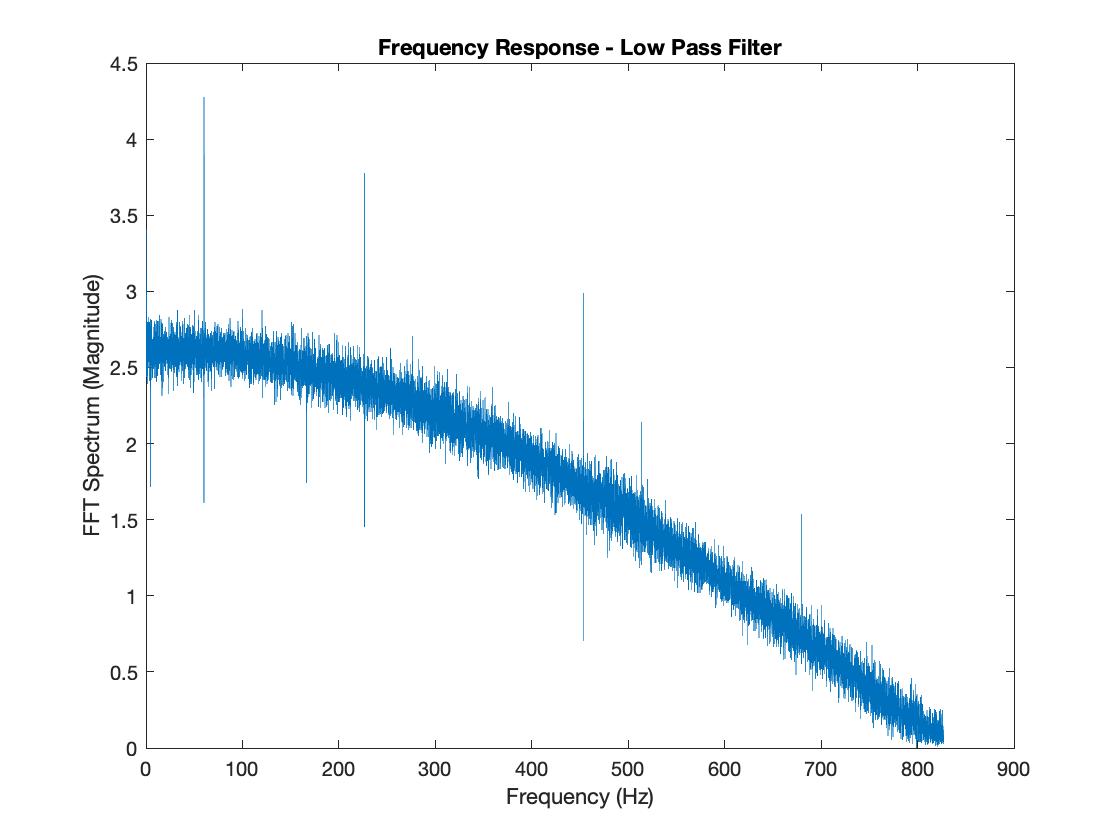

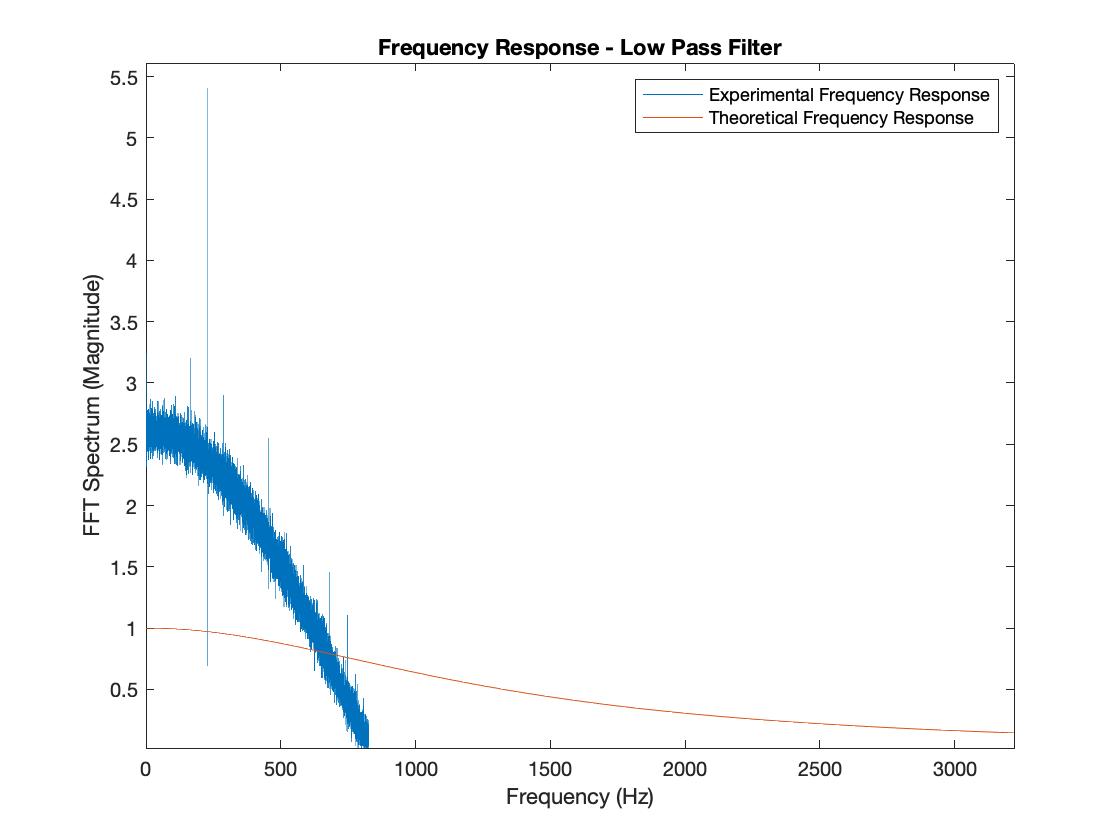

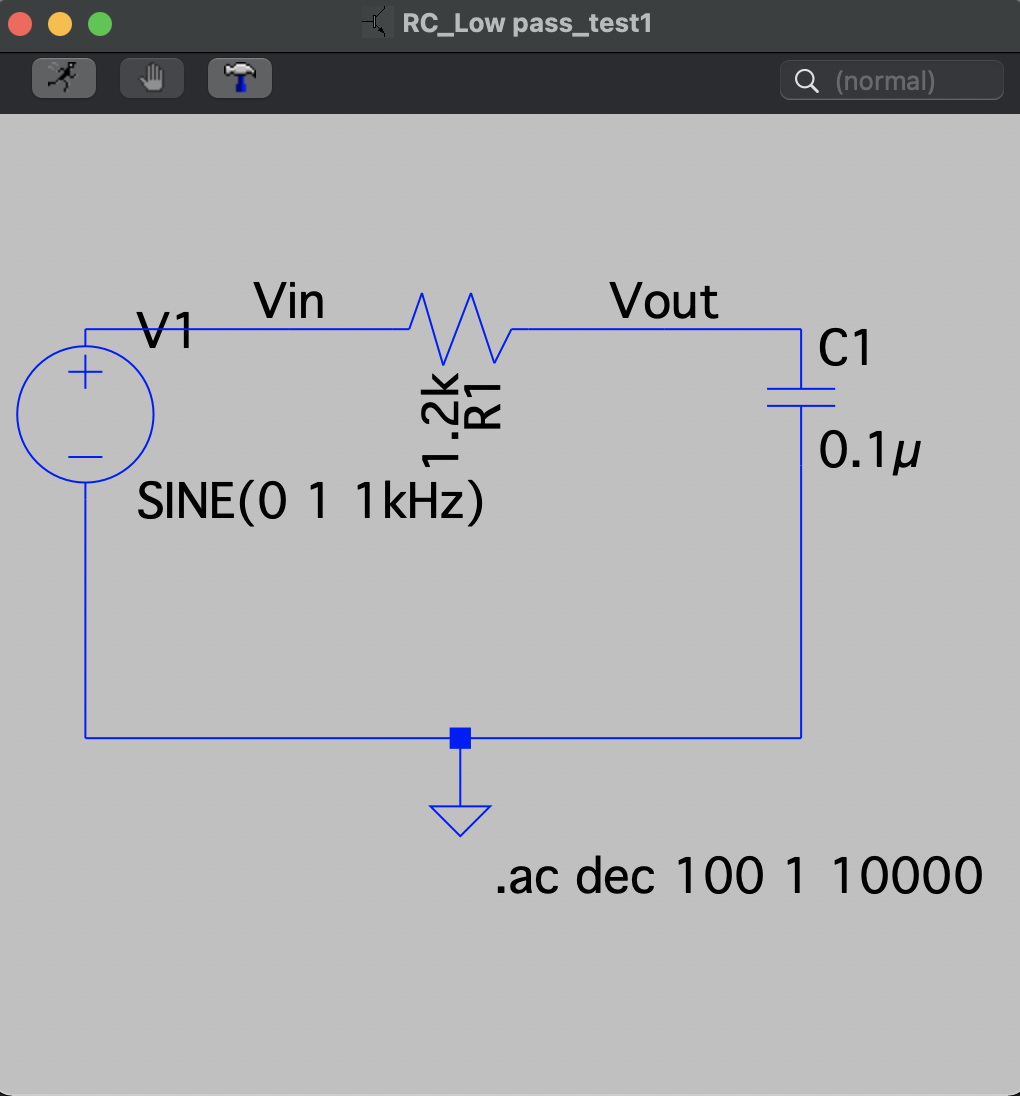

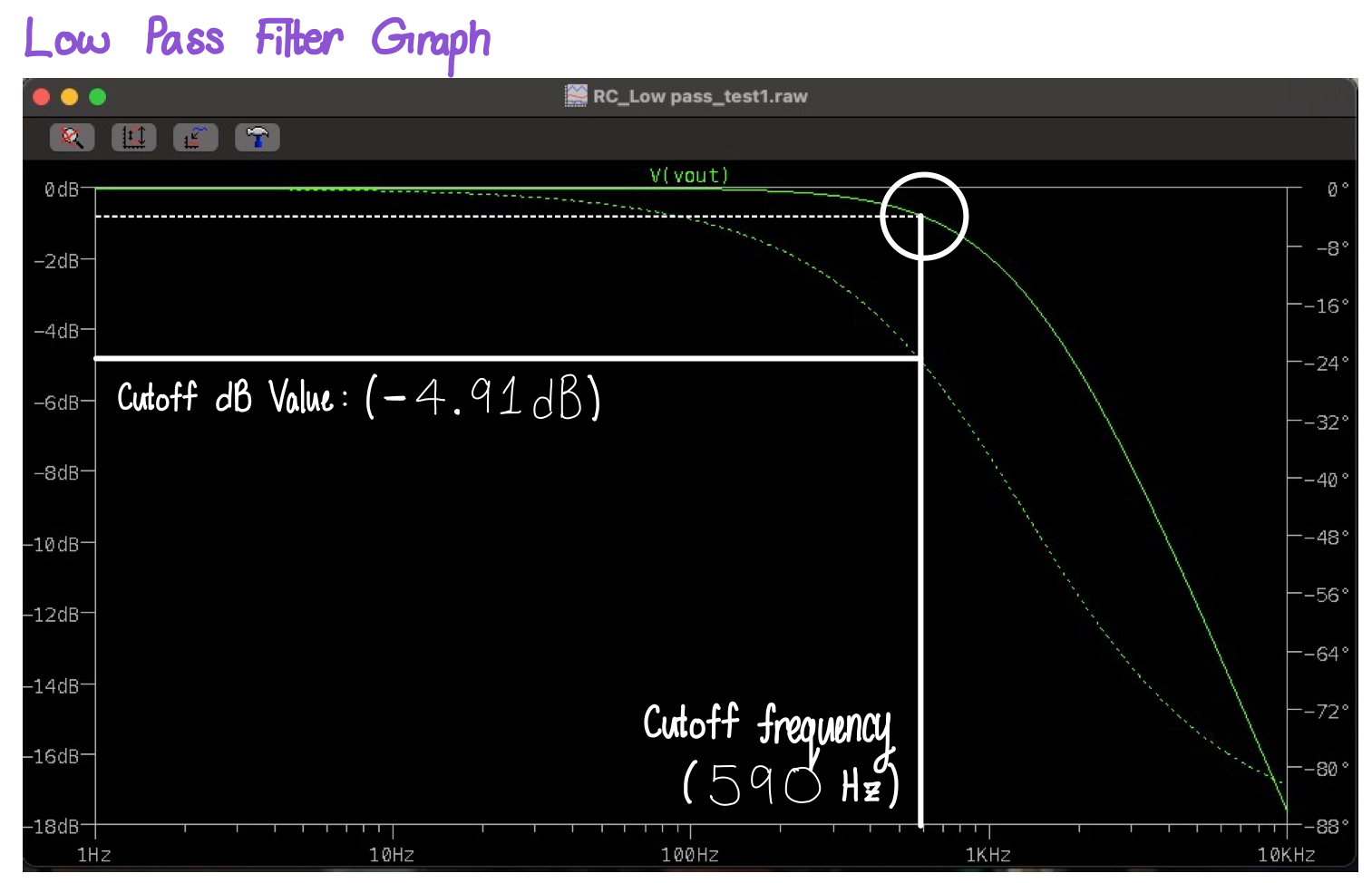

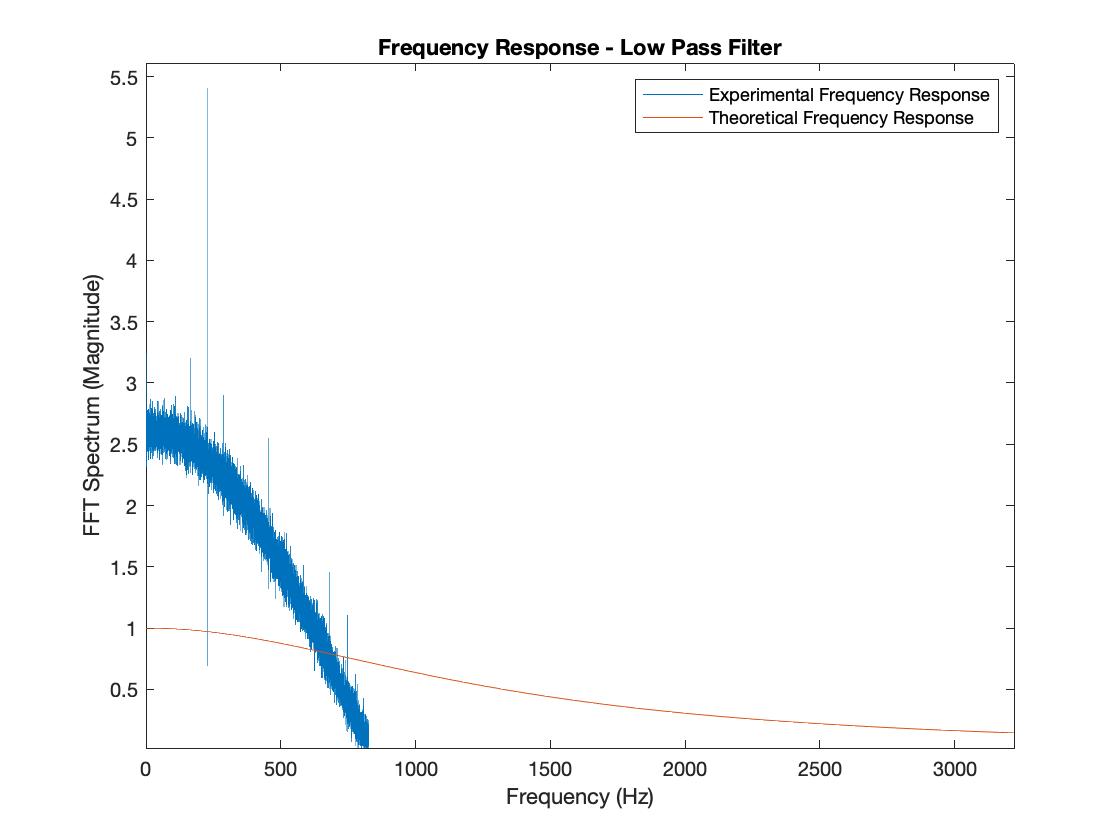

Picture 1: Low Pass Filter

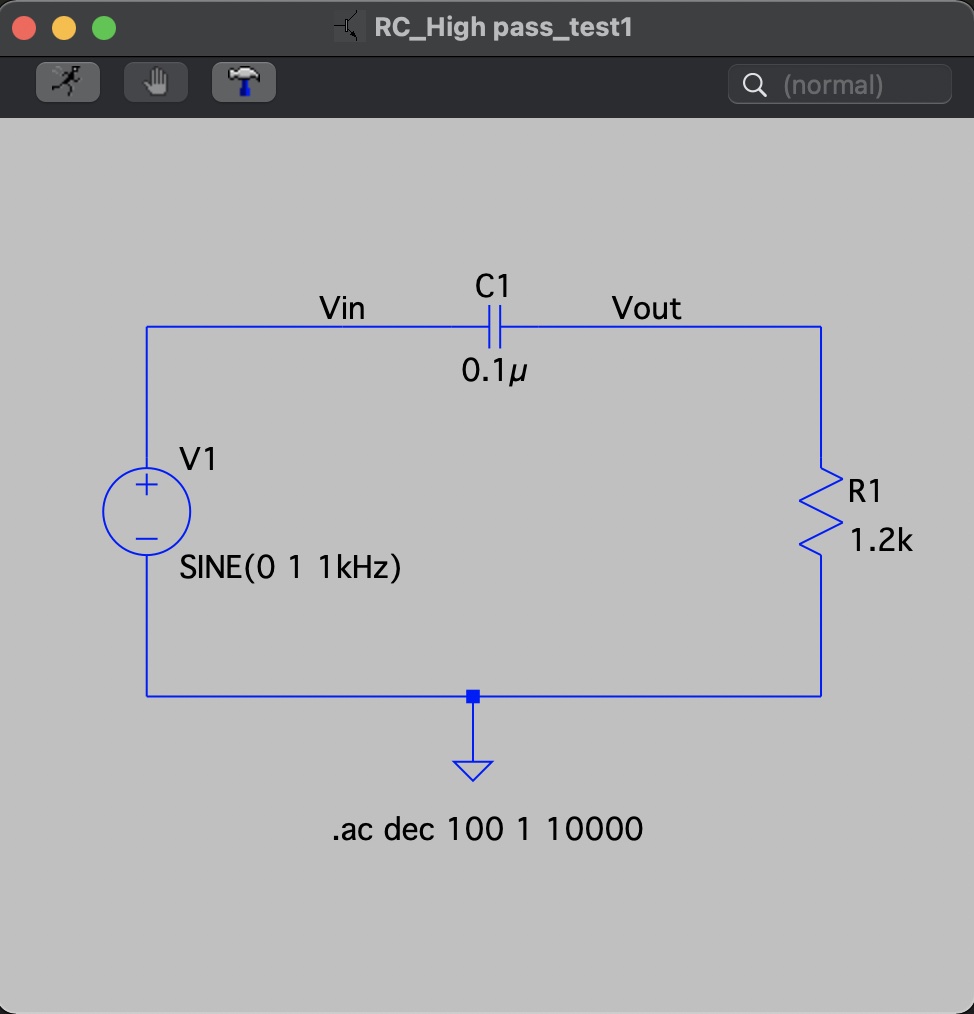

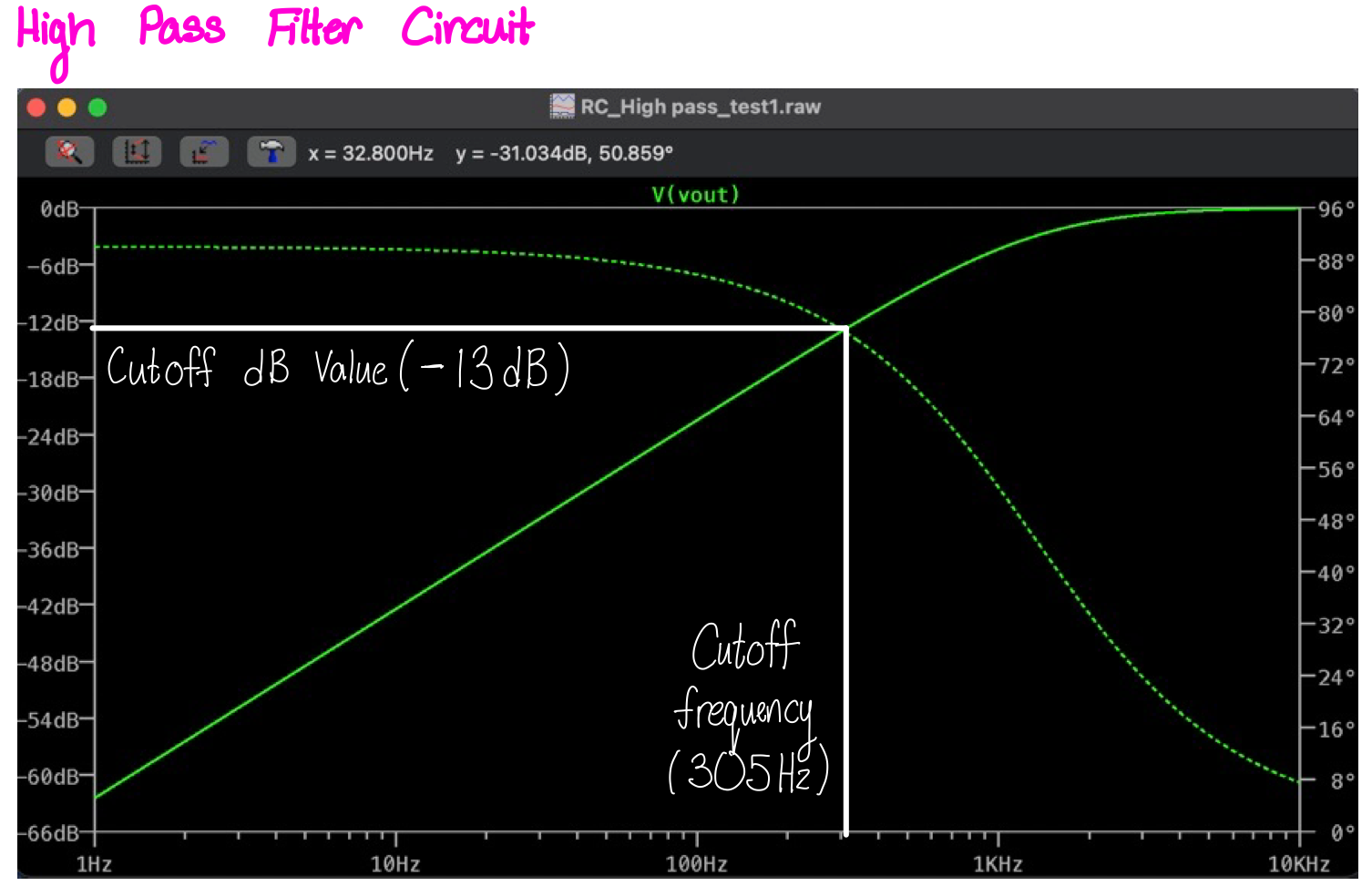

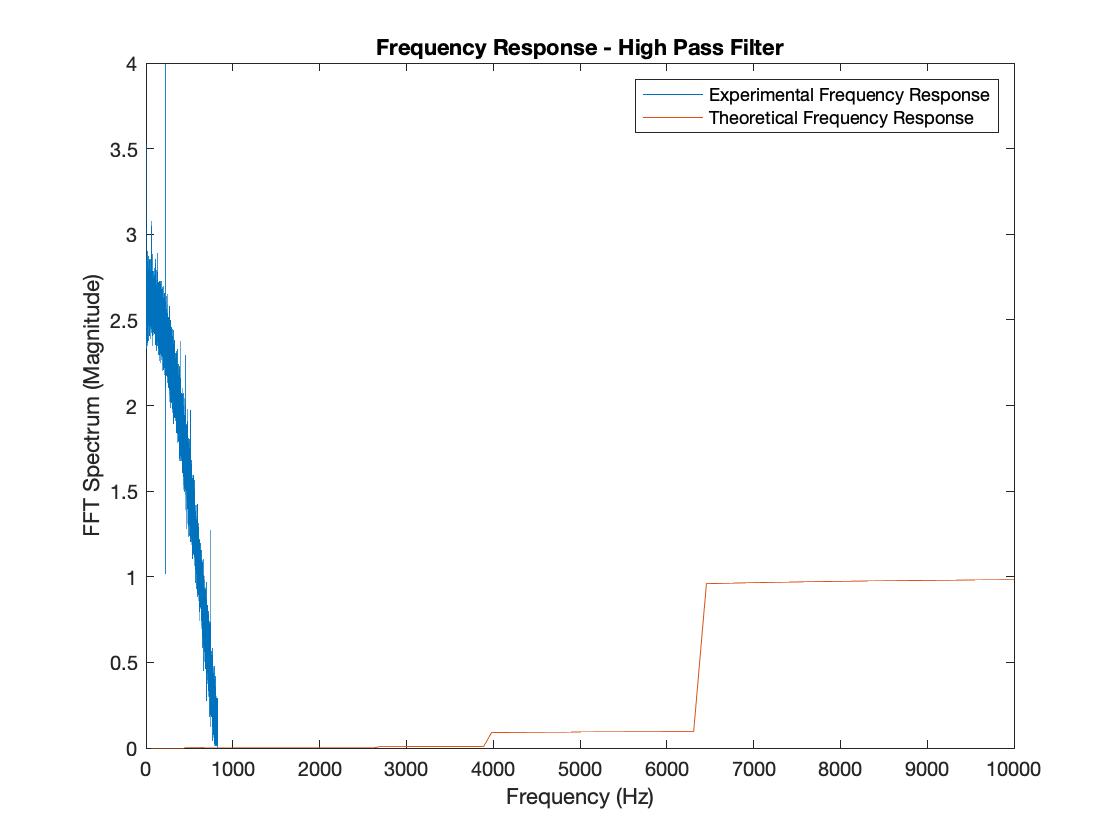

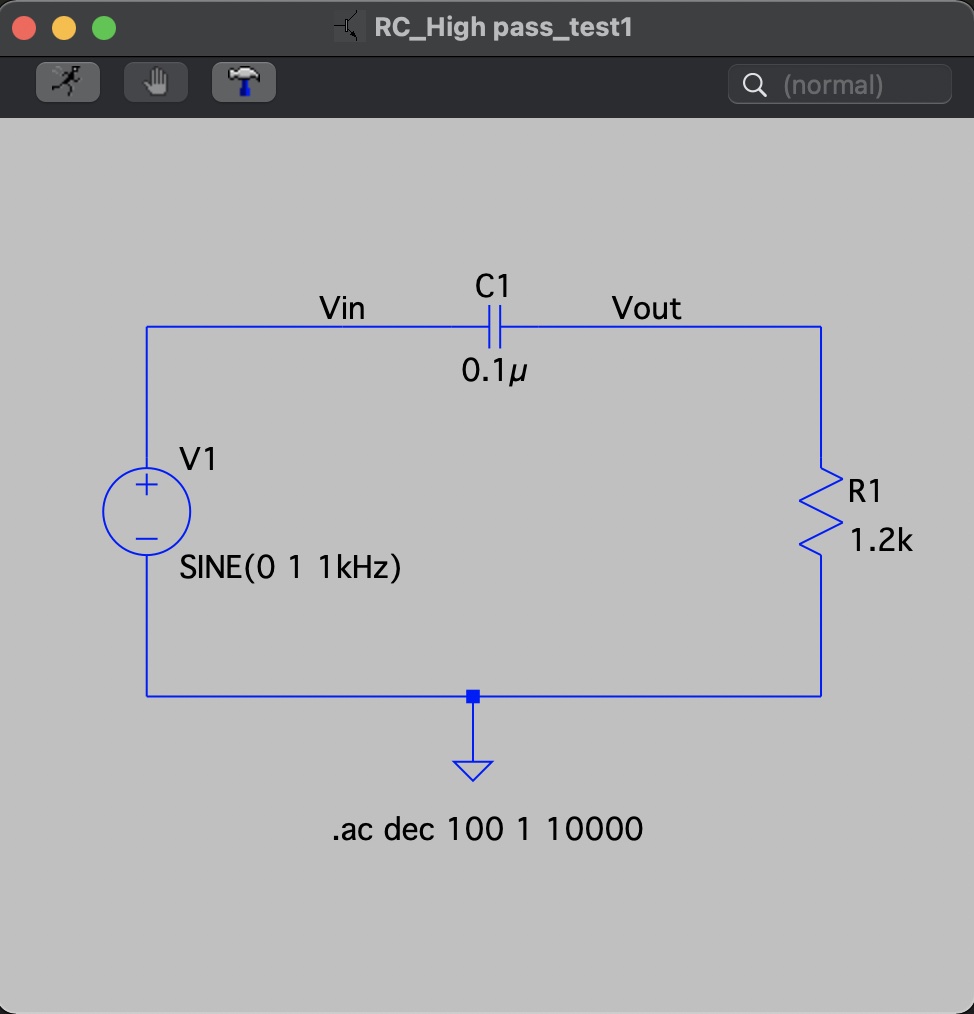

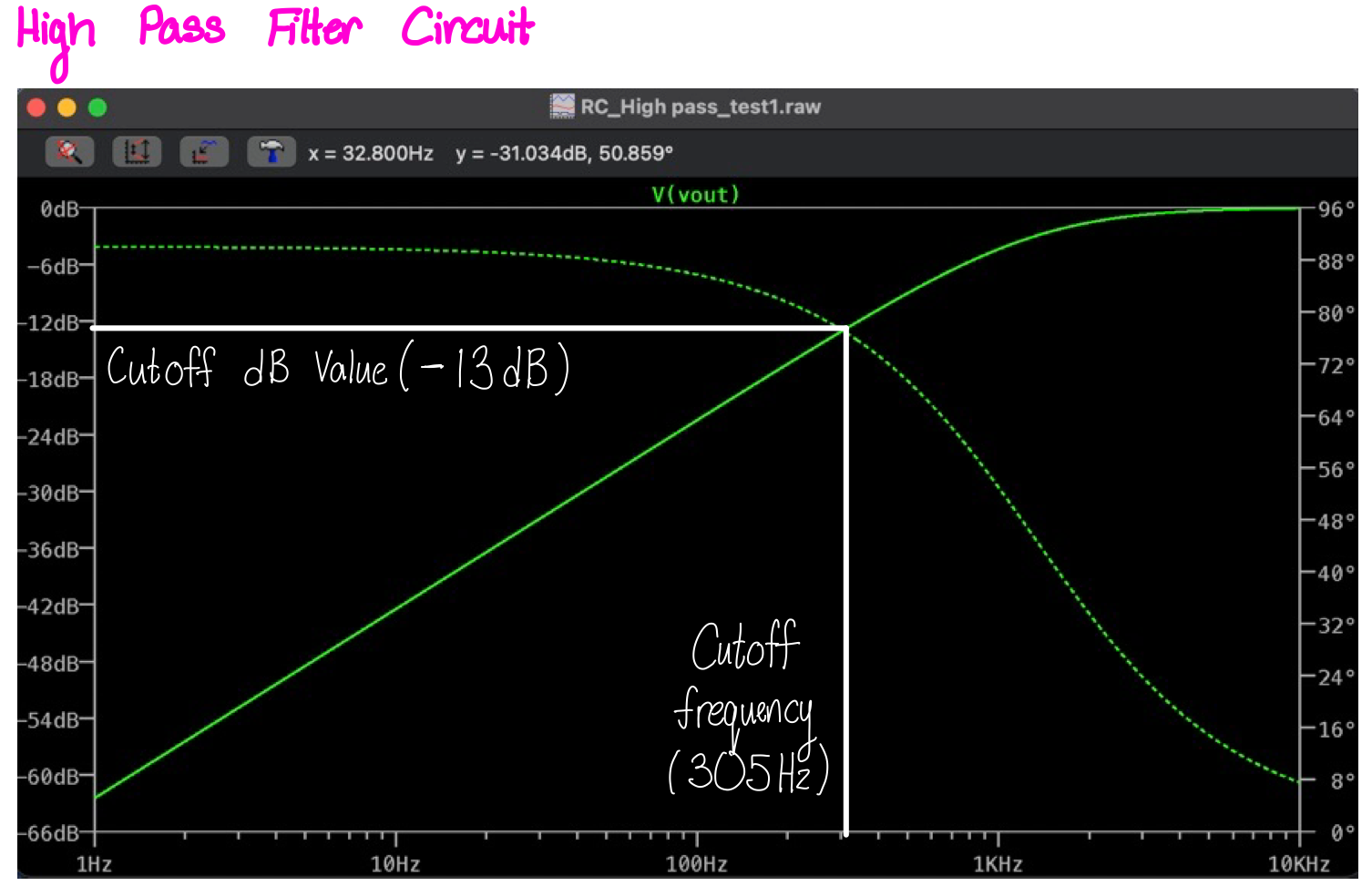

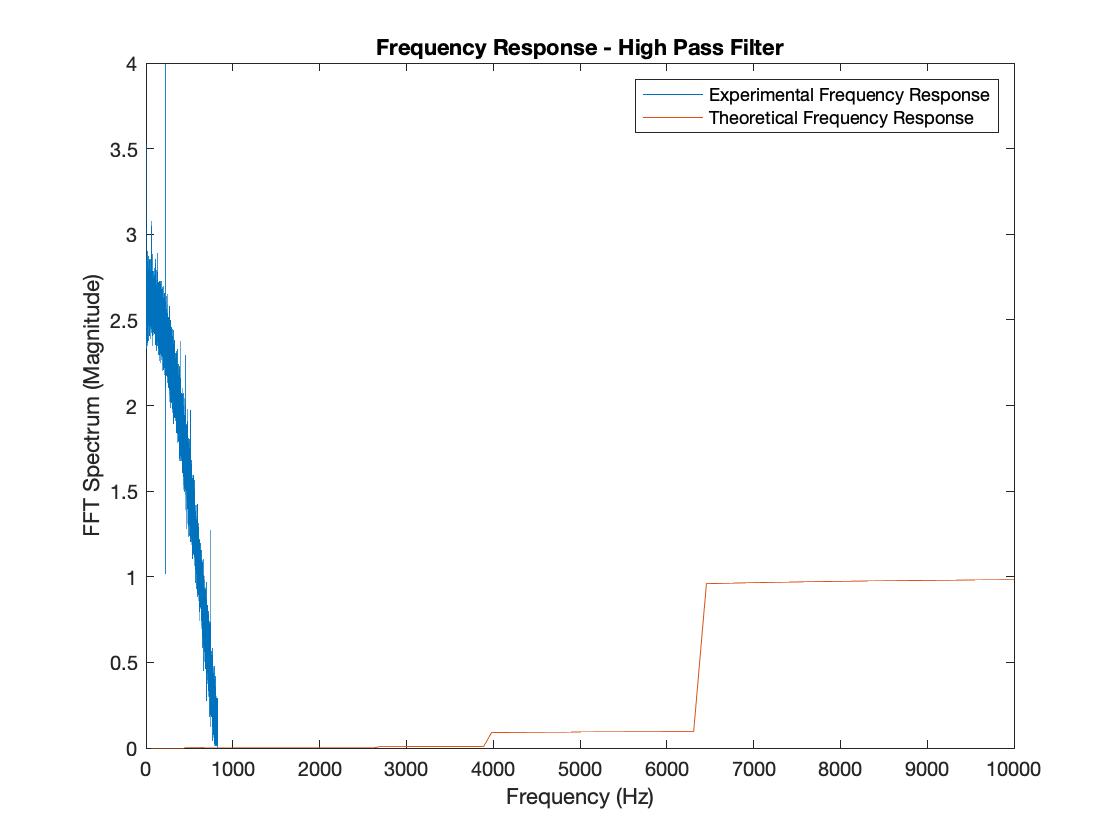

Picture 2: High Pass Filter

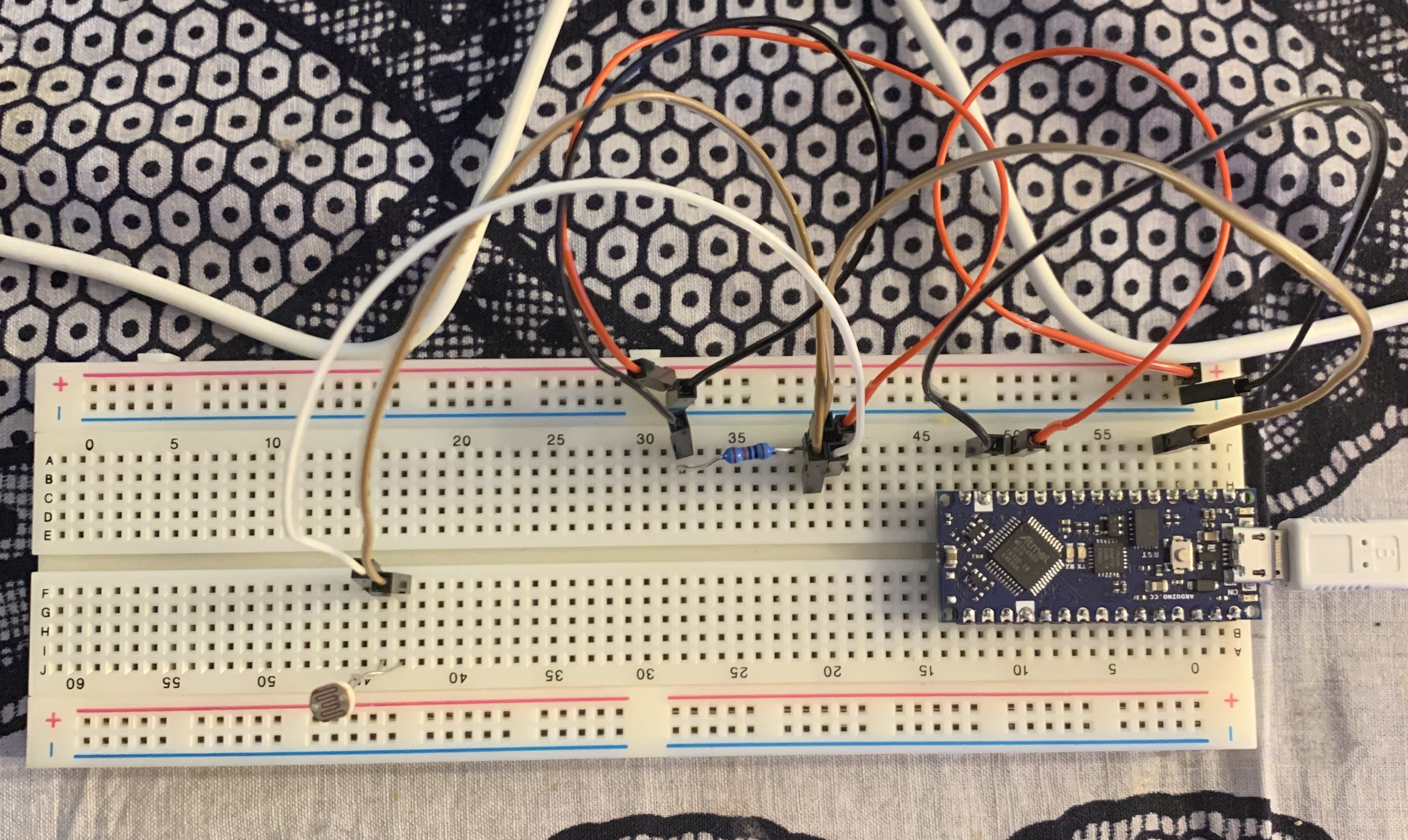

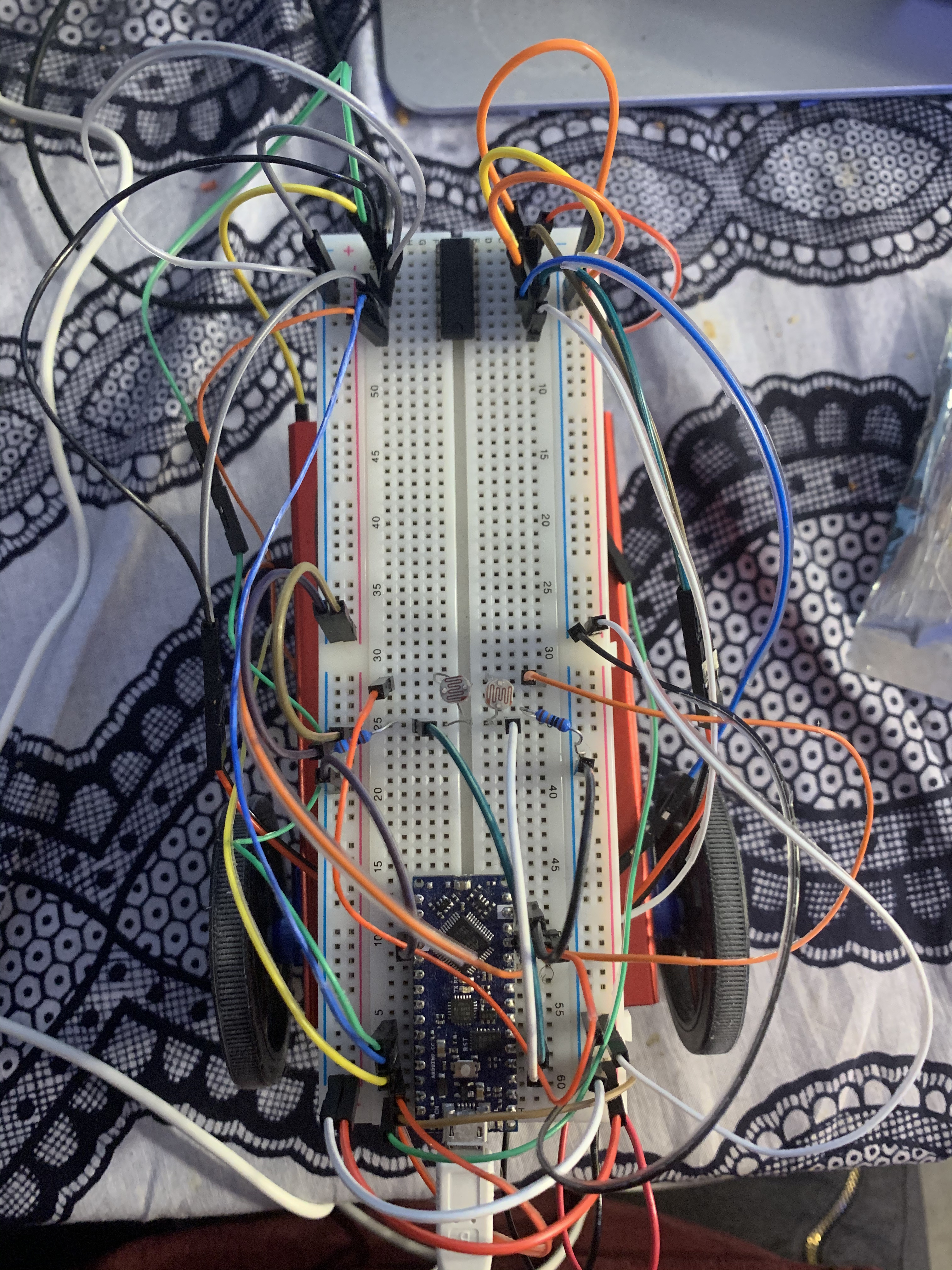

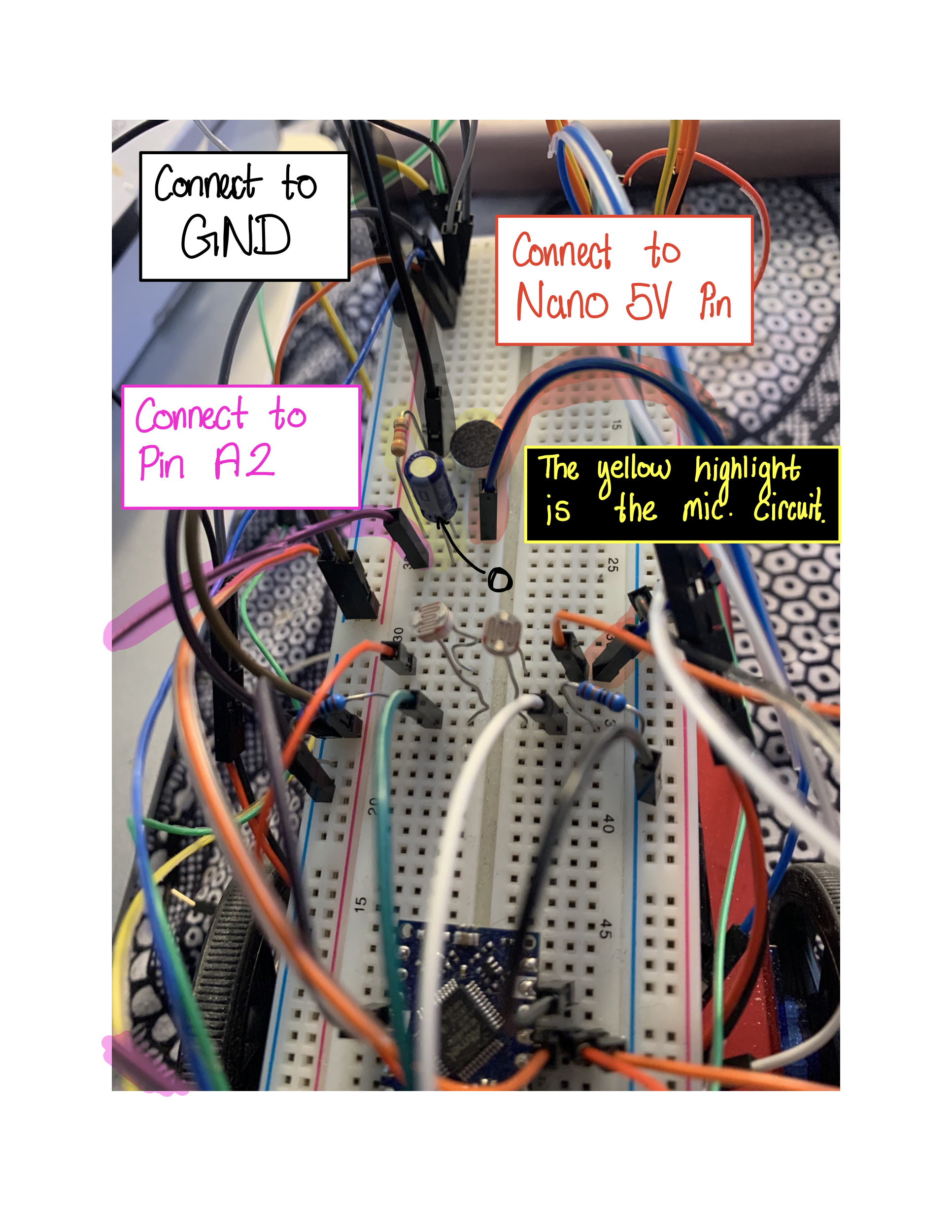

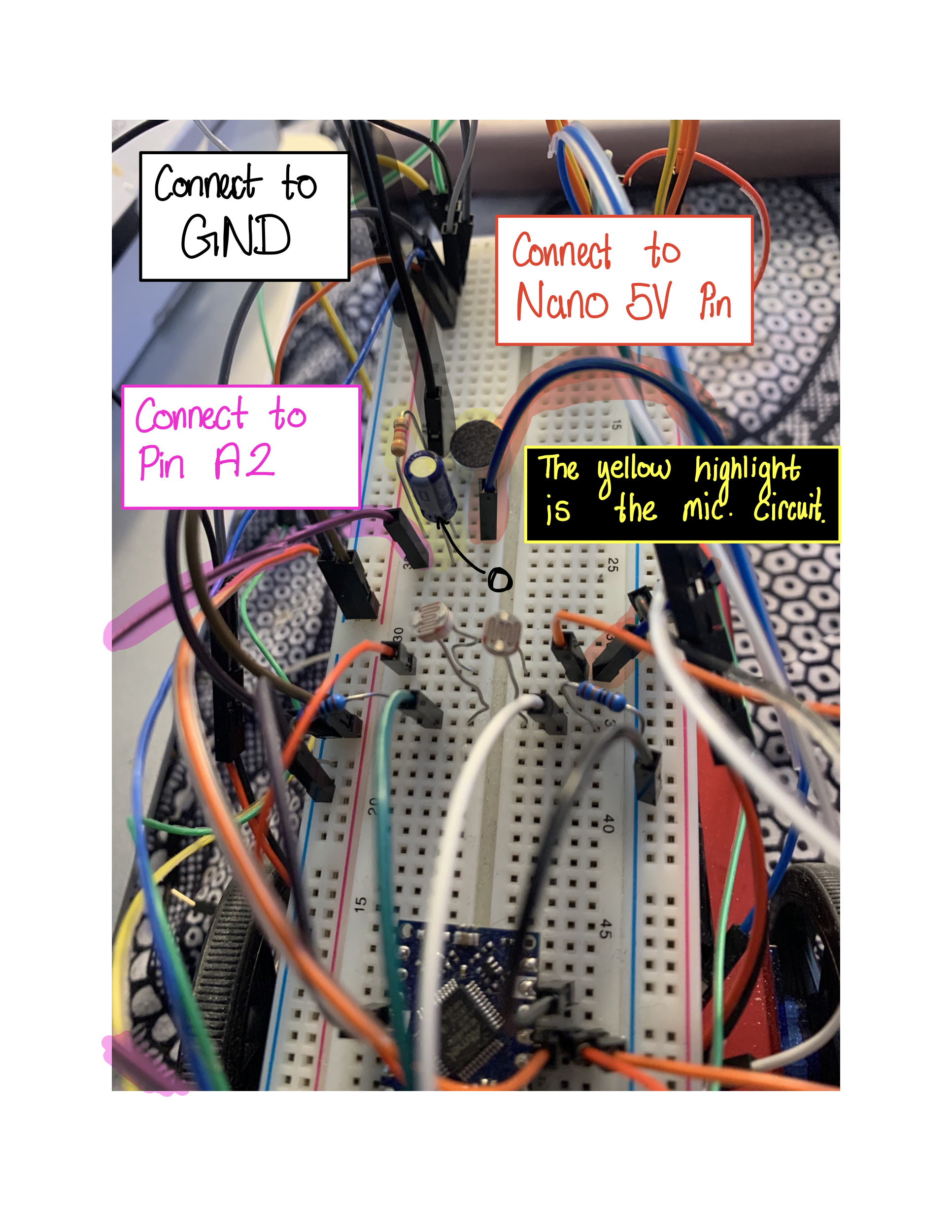

Picture 3: Basic Microphone Circuit

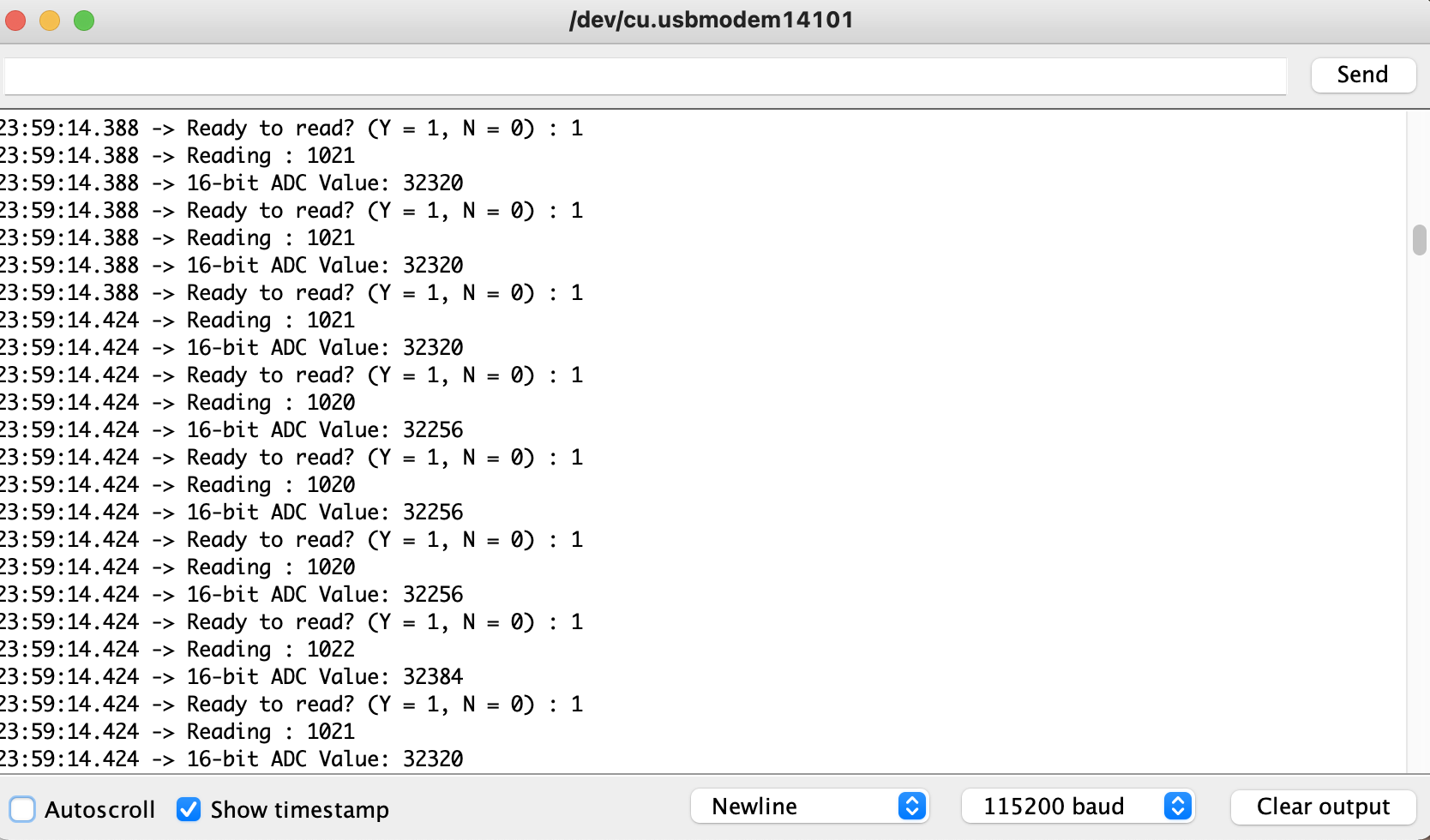

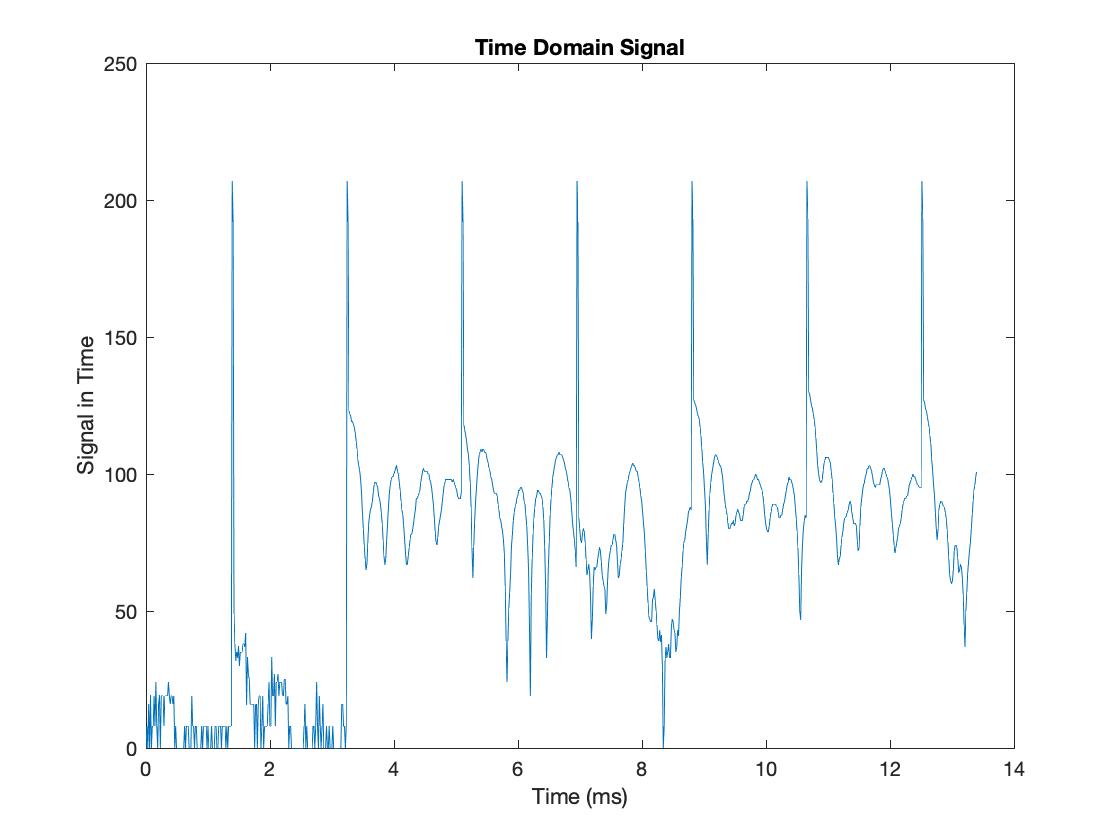

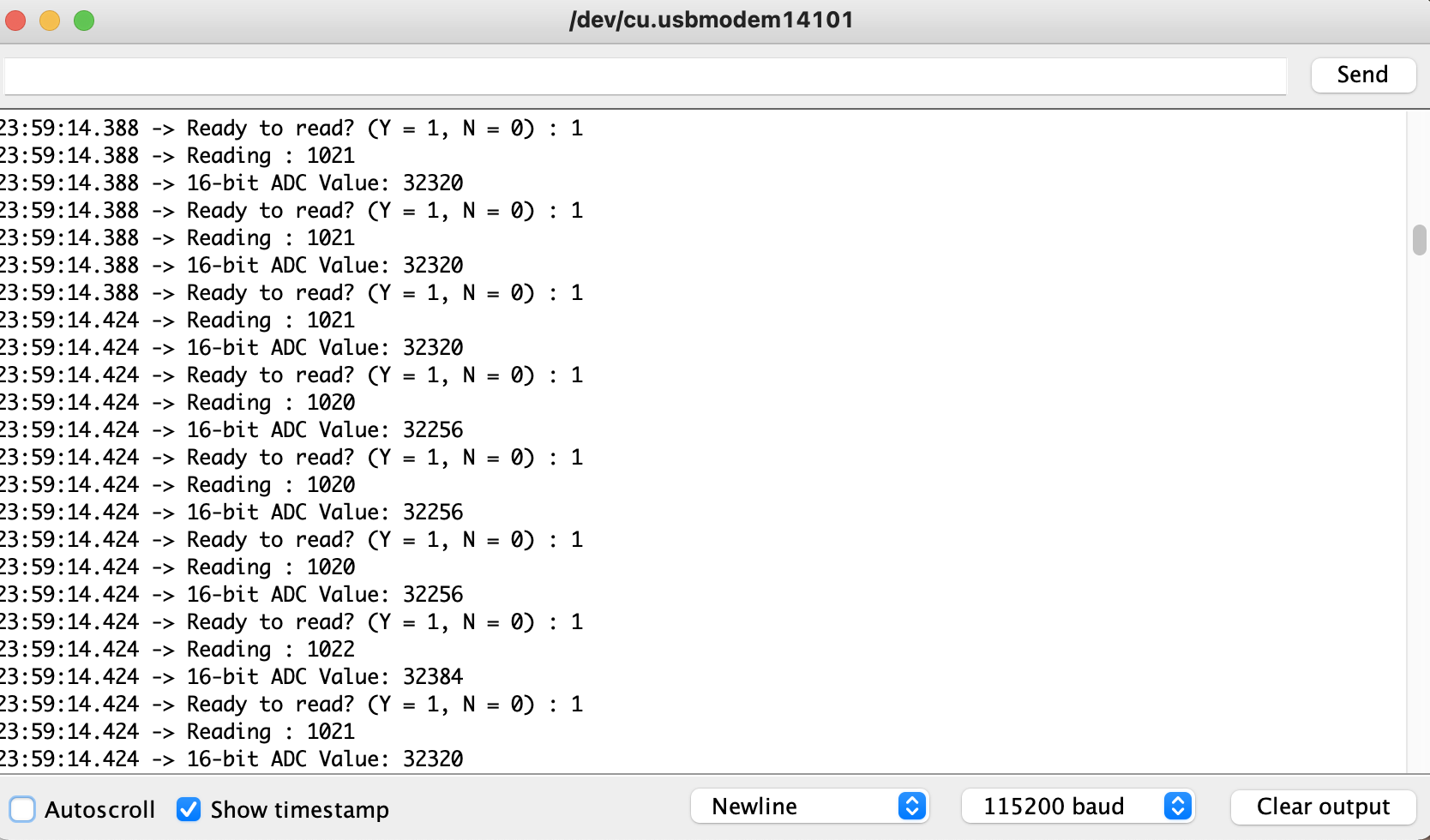

Picture 4: Graph of the data read for the Basic Microphone Circuit

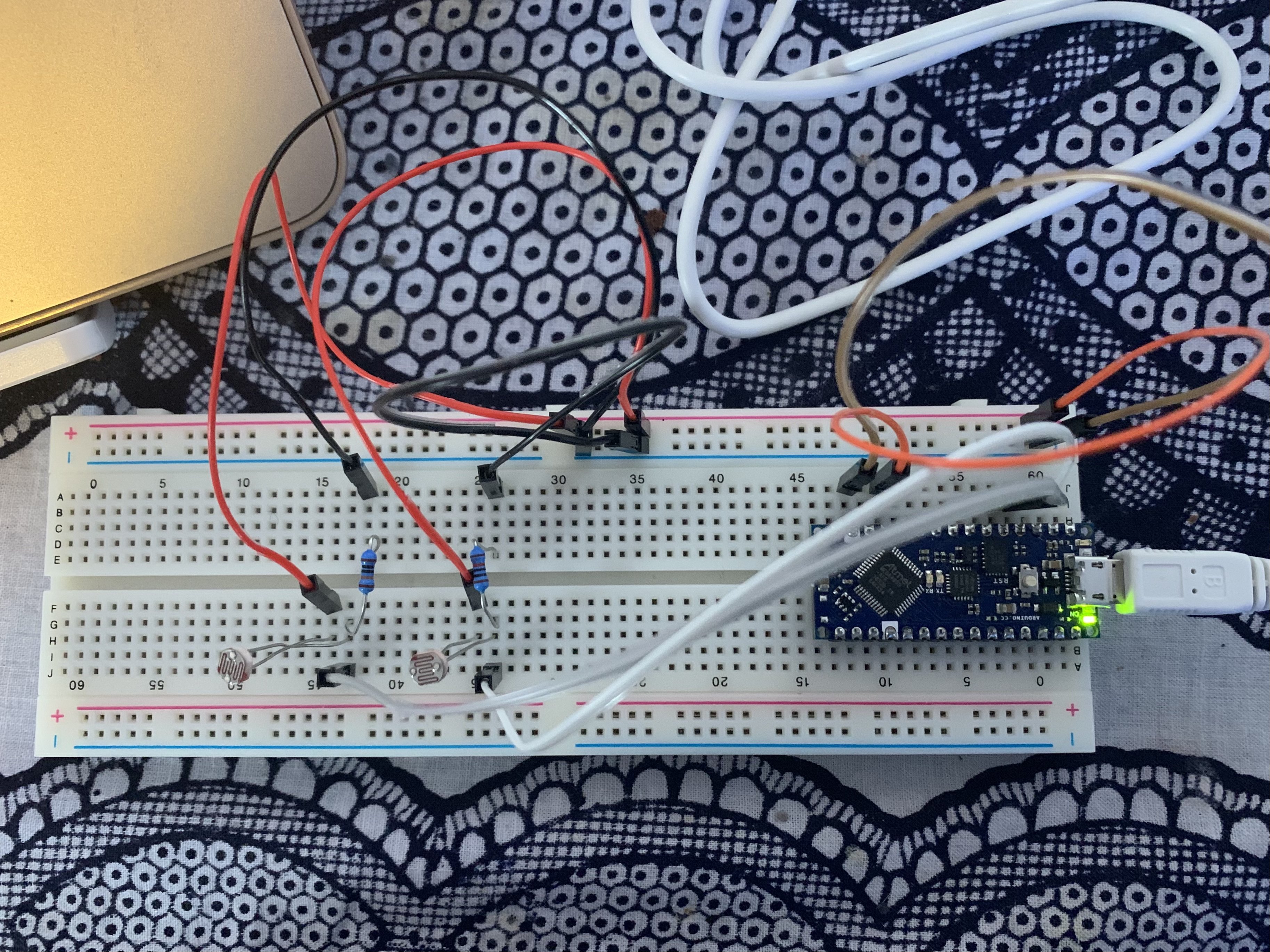

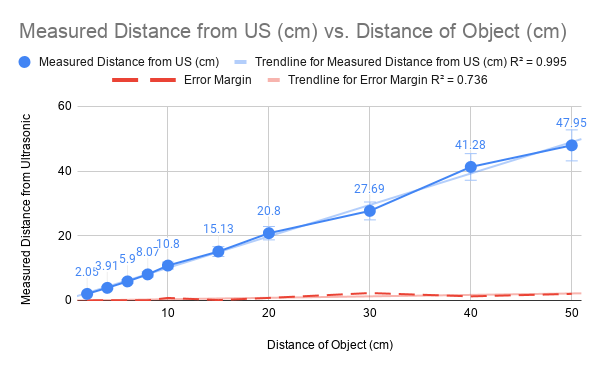

Picture 5a-b: Amplified Microphone Circuit & Graph

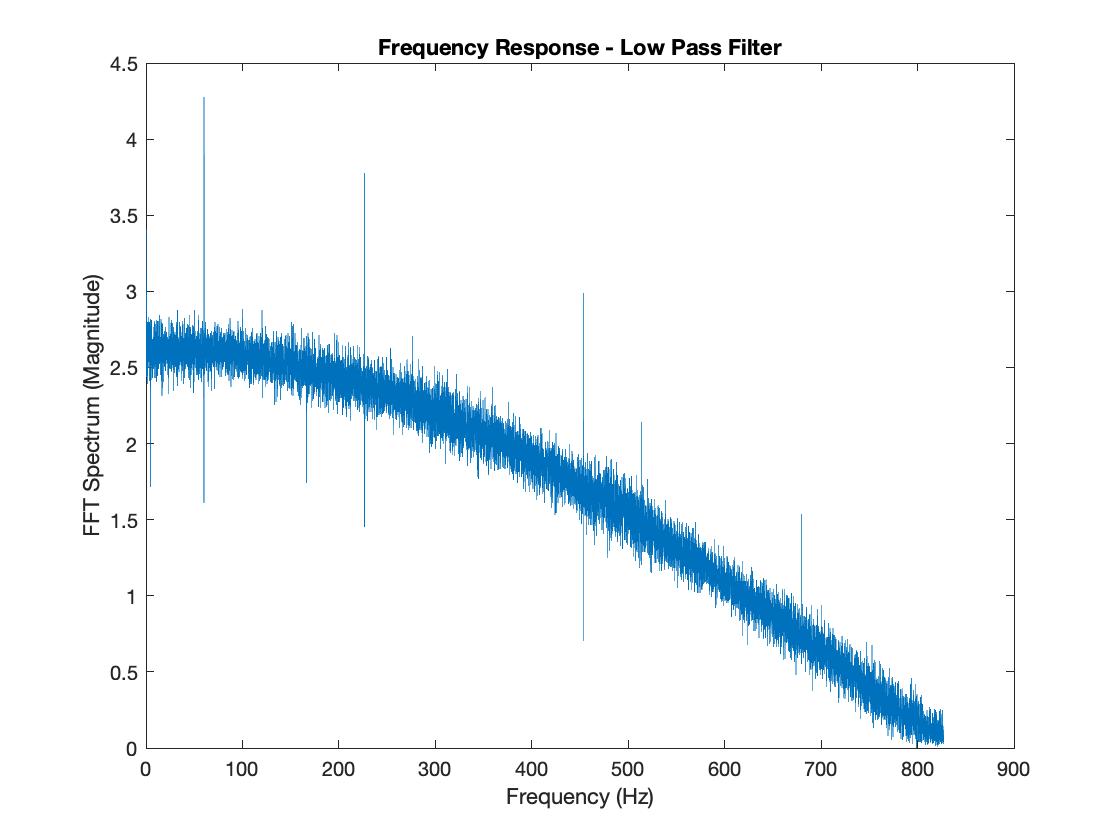

Picture 6: Amplified Low-Pass Microphone Graph

Comparing the two graphs, the most prominent differences were that the experimental filter response was noisier than the simulated response and that the amplitudes differed slightly. Additionally, the attenuation beyond the cut-off frequency was less gradual in the experimental response than in the simulated one. I believe these differences arise because the theoretical model does not account for noise from the ambient environment or for imperfections in the continuous voltage signal fed into the experimental filter, so its curve is smoother. Also, the volume of my speakers is adjustable, while the amplitude in the theoretical model is set by a variable.
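For reference, the "attenuation beyond the cut-off frequency" can be made concrete with the standard first-order RC low-pass response. This is only a sketch under the assumption that the simulated filter is a single-pole RC stage; $R$ and $C$ stand for whichever component values were used and are not taken from this lab.

```latex
\[
|H_{\mathrm{LP}}(f)| = \frac{1}{\sqrt{1 + (f/f_c)^2}},
\qquad f_c = \frac{1}{2\pi R C}
\]
\[
|H_{\mathrm{LP}}(f_c)| = \frac{1}{\sqrt{2}} \approx 0.707 \;\; (-3\ \mathrm{dB}),
\qquad
|H_{\mathrm{LP}}(f)| \approx \frac{f_c}{f} \quad \text{for } f \gg f_c
\]
```

Under that assumption, the ideal curve falls by about 20 dB per decade above $f_c$, which gives a baseline for judging whether a measured roll-off is steeper or shallower.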

Picture 7: Amplified High-Pass Microphone Graph

The theoretical response curve was a bit more jagged than I had anticipated, but its general direction and trend were not far from my expectation. As with the low-pass graphs, the experimental filter response was noisier than the simulated response, and the amplitudes of the two curves differed slightly. The experimental response also attenuated more sharply than I would have expected from the theoretical curve. I think the reasoning behind these observations is similar to that for the low-pass filter: the theoretical model does not factor in sources of noise, and the volume of my speakers is adjustable, while the amplitude in the theoretical model is set by a variable.

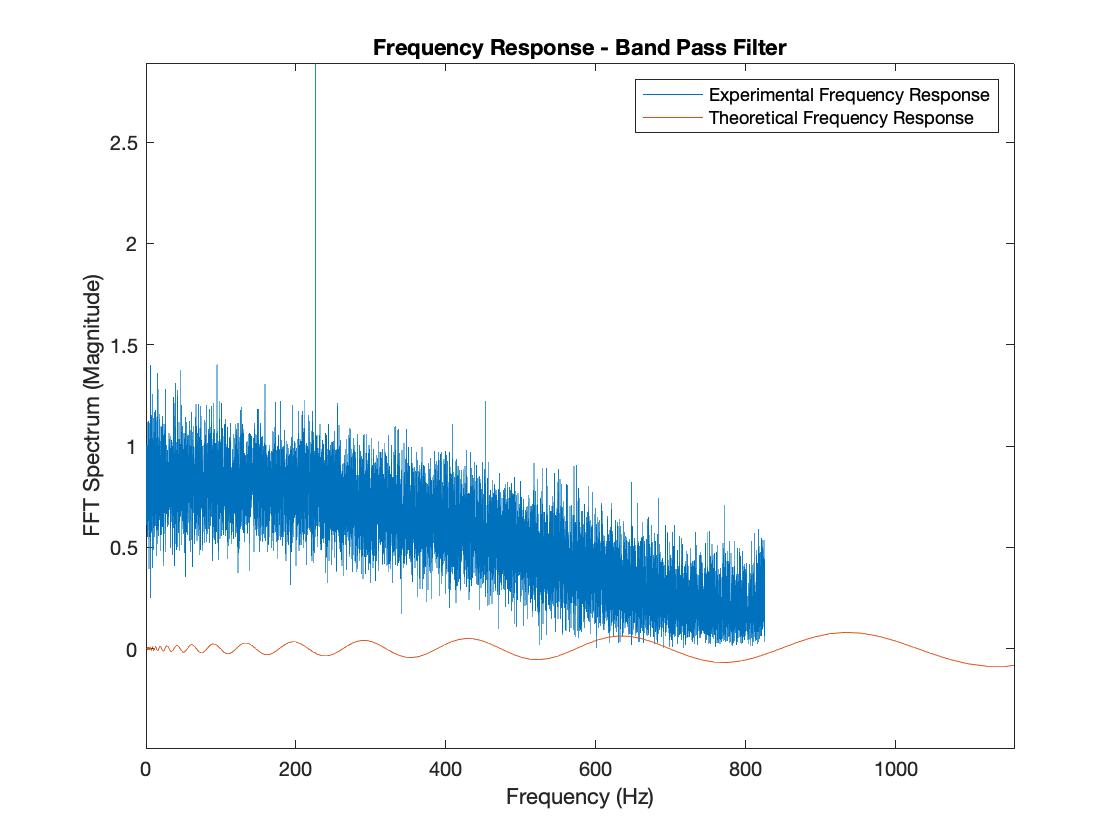

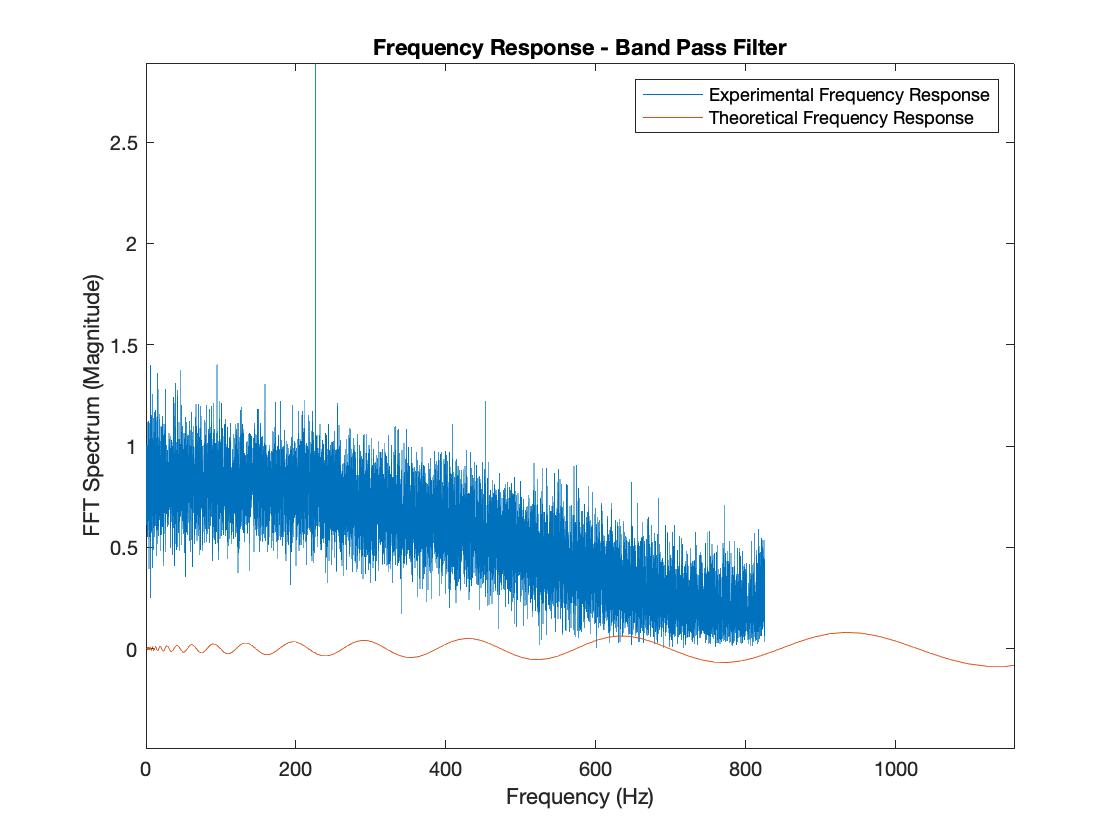

Picture 8: Amplified Band-Pass Microphone Graph

My experimental curve for the bandpass filter takes on the same general shape as the theoretical bandpass response. The differences are that the experimental curve has more noise, a larger amplitude, and appears to attenuate at a different (slower) rate, judging by the portion that was captured by MATLAB. Besides the non-ideal environment mentioned previously, I believe that the signal processing and timing involved in capturing the signal for MATLAB contribute to the differences between the theoretical and experimental bandpass responses.
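One way to picture the theoretical band-pass shape is as a high-pass stage followed by a low-pass stage. The sketch below is only an illustration under that assumption (ideal first-order stages that are buffered from each other). The function names and any cutoff values passed to them are hypothetical, not the ones used in this lab. It works with power gain, $|H|^2$, to keep the arithmetic simple.

```c
#include <assert.h>

/* Power gain |H|^2 of an ideal first-order low-pass stage with cutoff fc_hz. */
double lowpass_power_gain(double f_hz, double fc_hz)
{
    assert(fc_hz > 0.0);
    double r = f_hz / fc_hz;
    return 1.0 / (1.0 + r * r);
}

/* Power gain |H|^2 of an ideal first-order high-pass stage with cutoff fc_hz. */
double highpass_power_gain(double f_hz, double fc_hz)
{
    assert(fc_hz > 0.0);
    double r = f_hz / fc_hz;
    return (r * r) / (1.0 + r * r);
}

/* Band-pass modelled as the two stages in series. Assumes the stages are
   buffered so they do not load each other, so the power gains multiply. */
double bandpass_power_gain(double f_hz, double f_low_hz, double f_high_hz)
{
    return highpass_power_gain(f_hz, f_low_hz) *
           lowpass_power_gain(f_hz, f_high_hz);
}
```

At a stage's own cutoff frequency the power gain is 0.5 (the -3 dB point). The product is largest between the two cutoffs and falls off on both sides, which is the general shape to compare a measured curve against.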

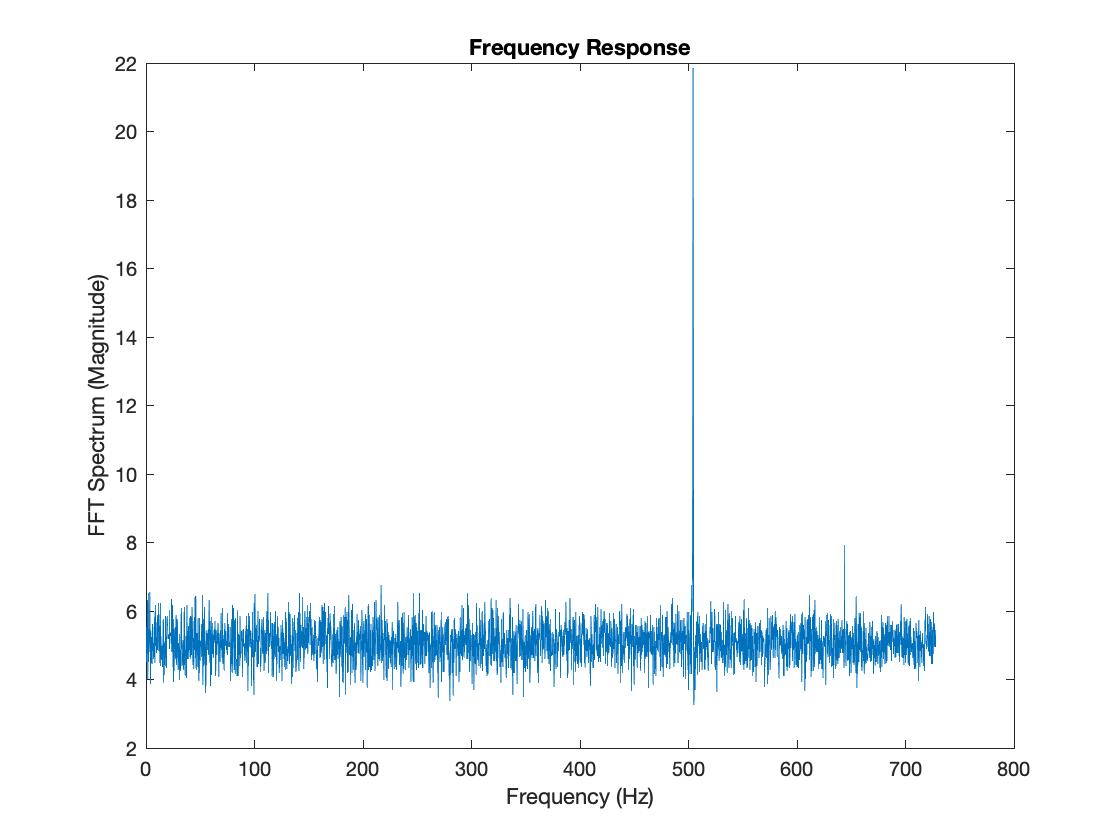

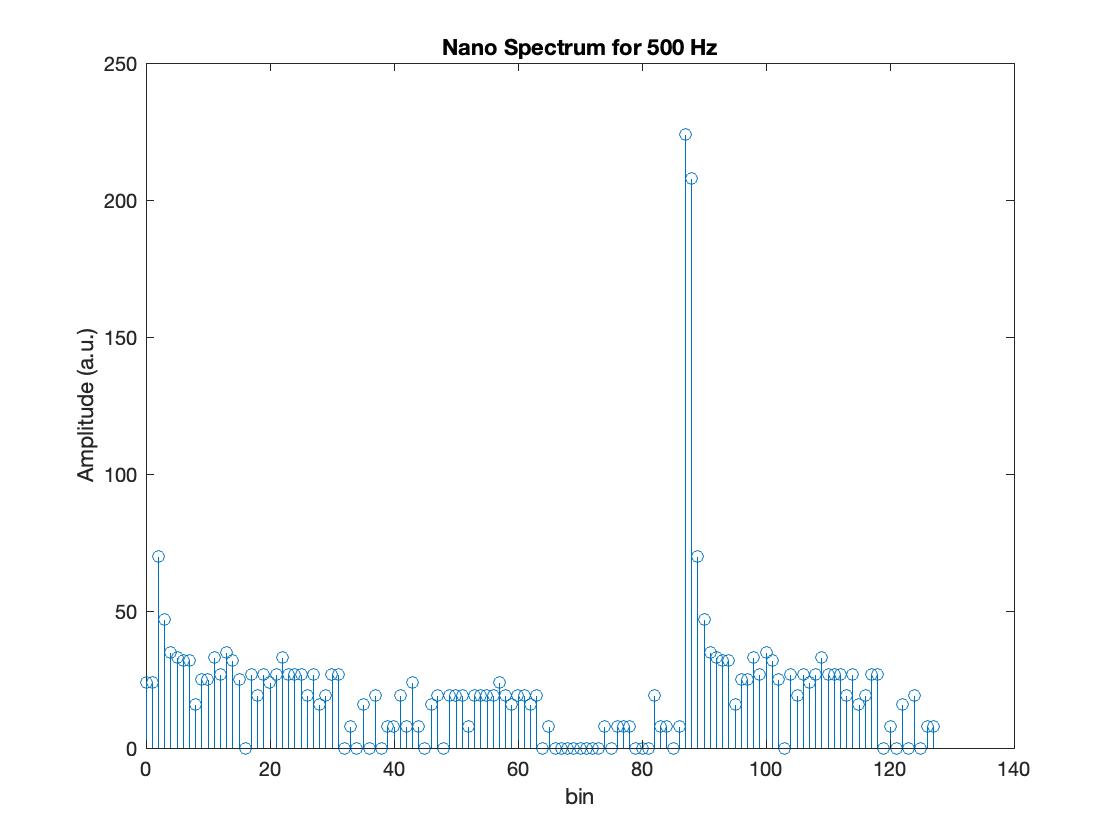

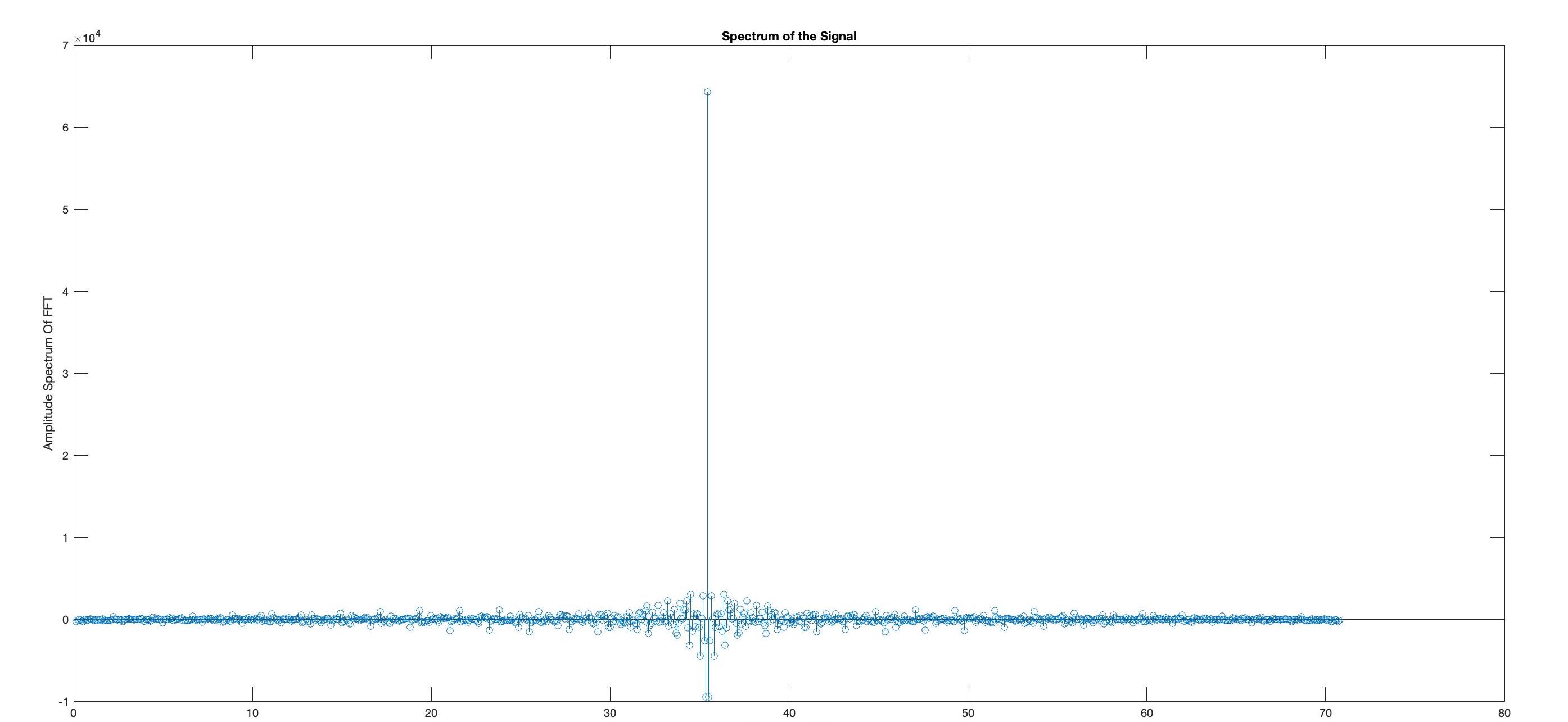

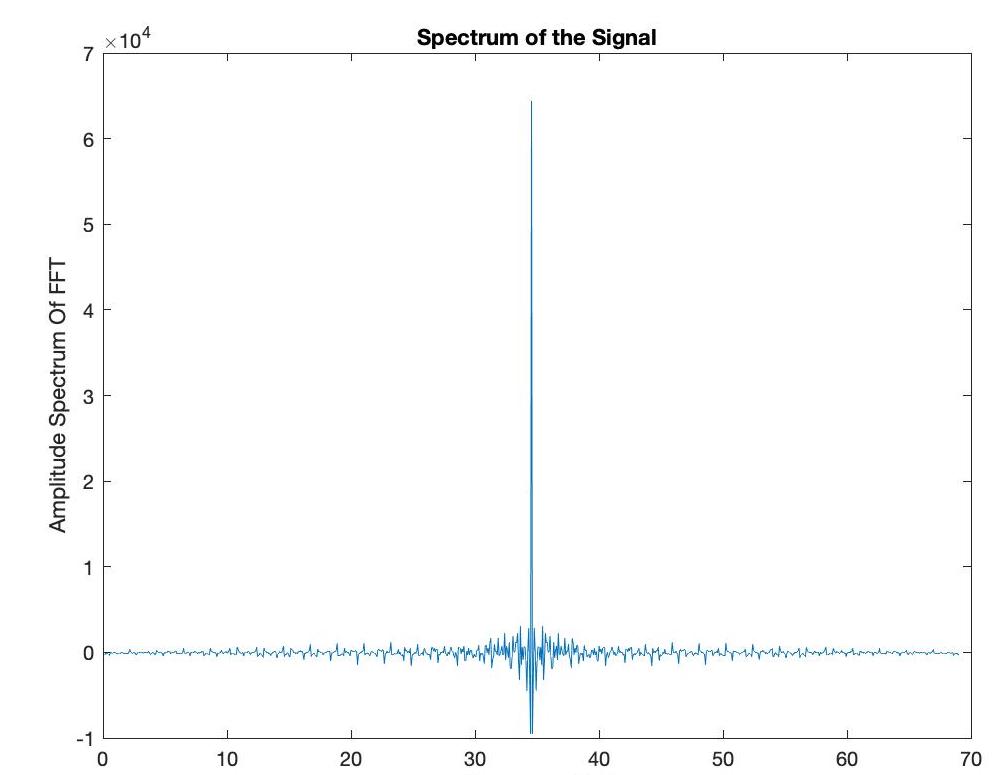

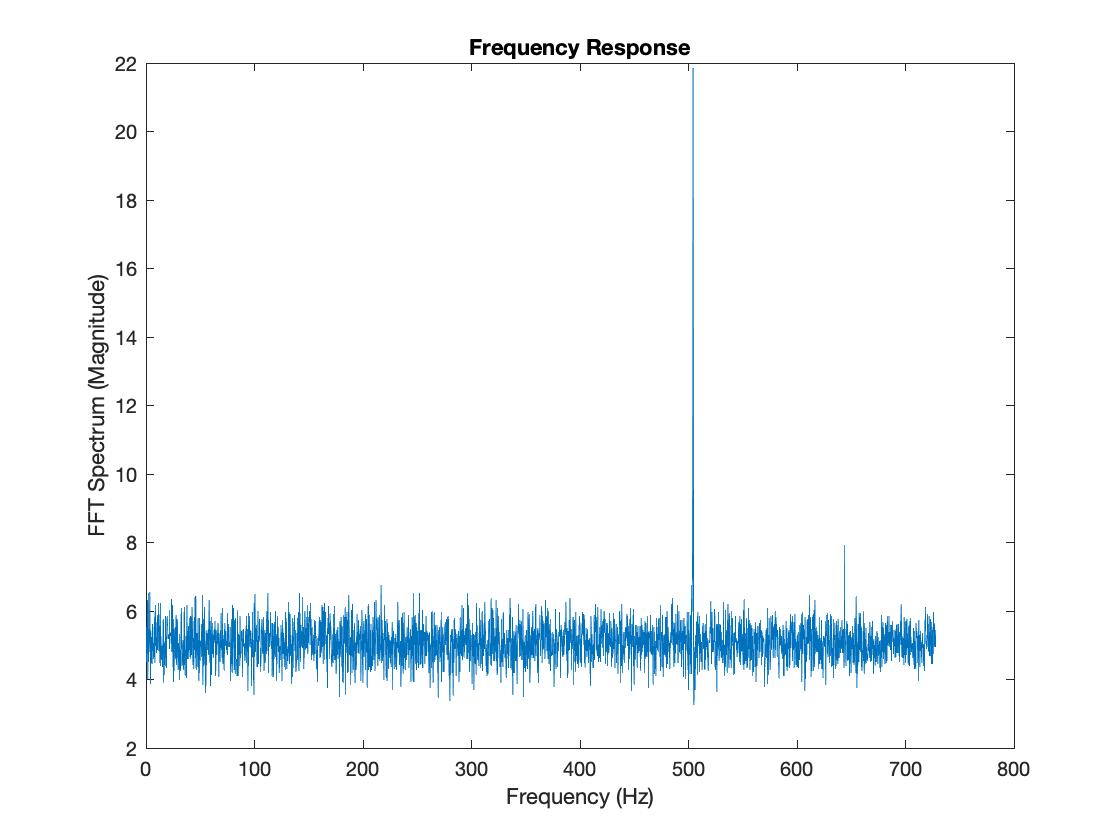

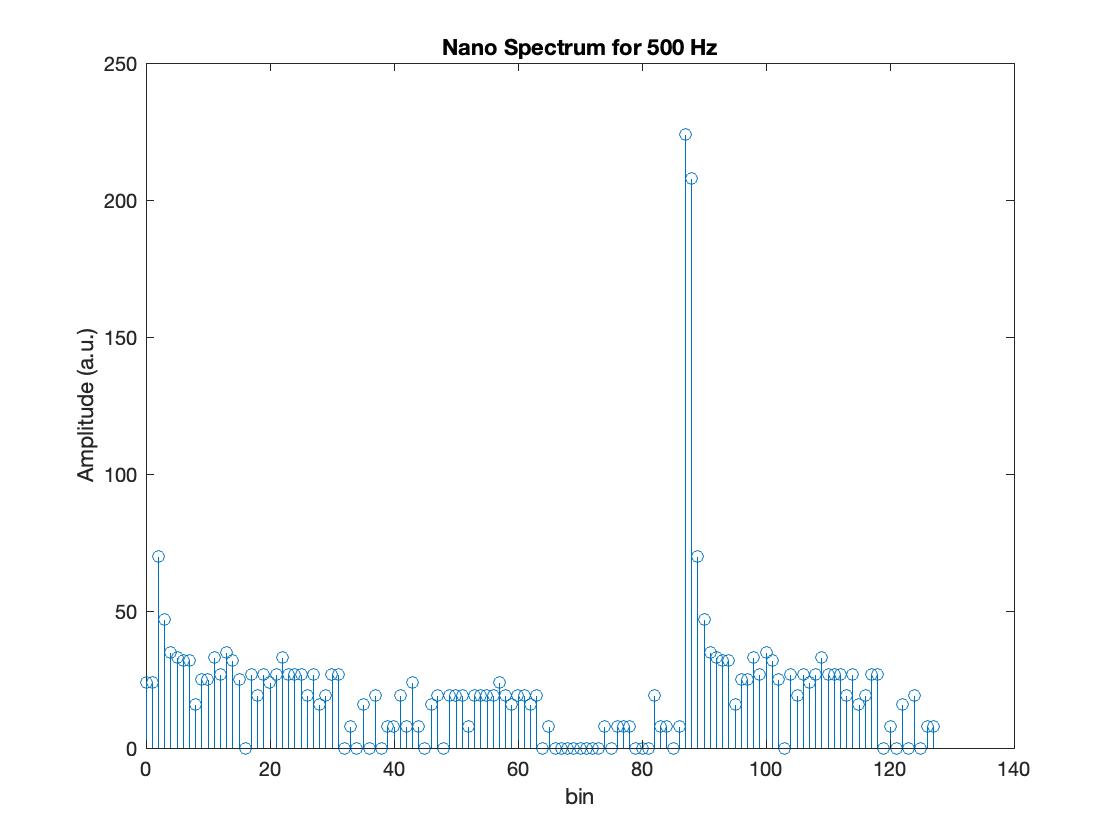

Picture 9: The spectrum obtained with the Nano for a sound frequency of 500 Hz

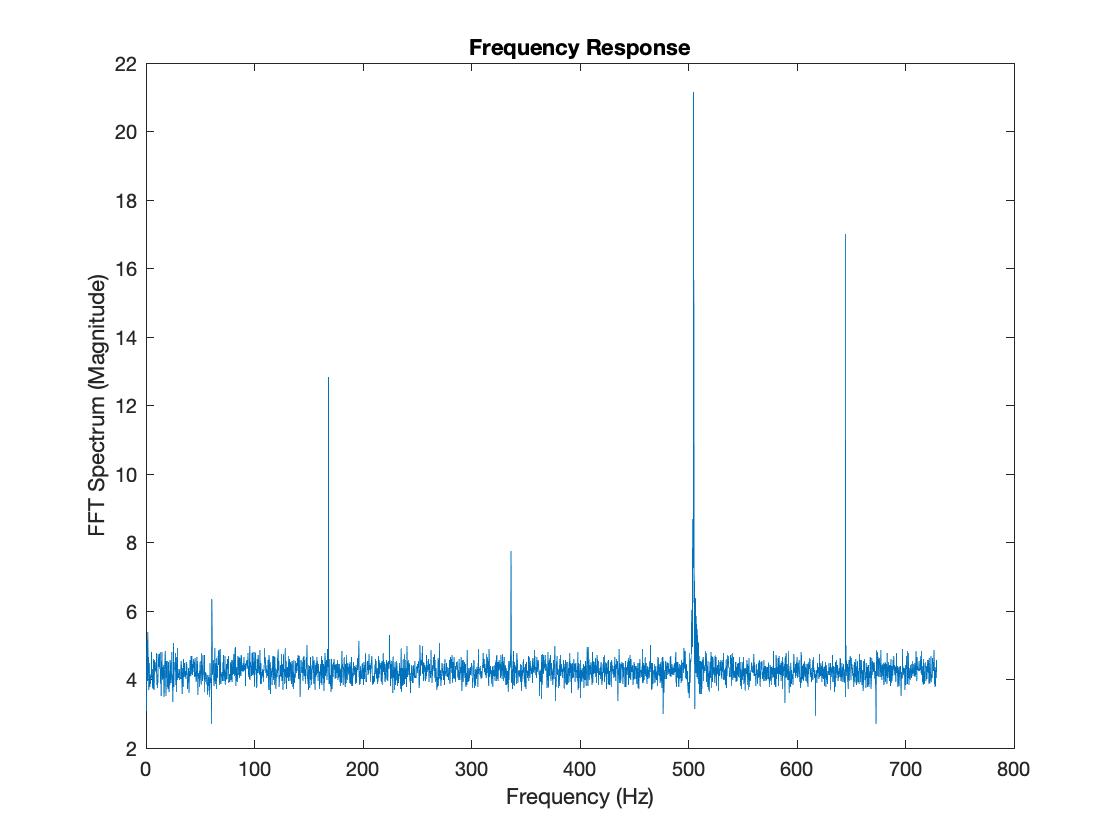

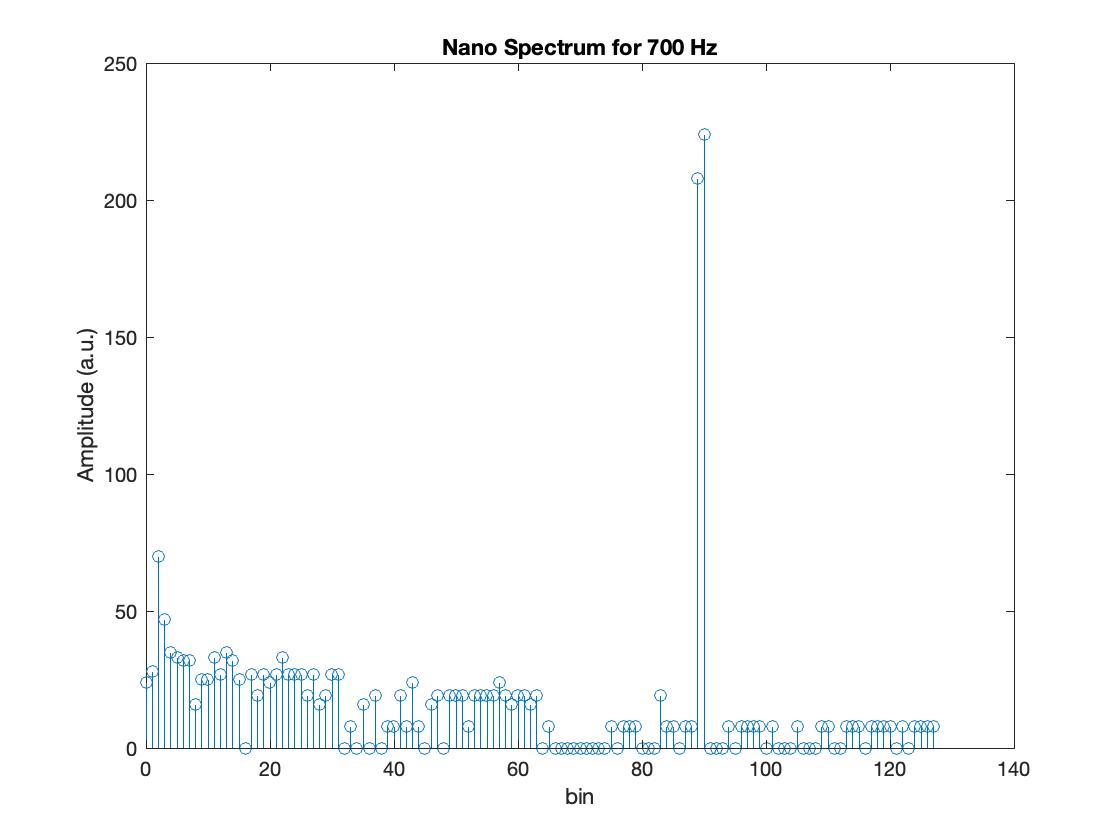

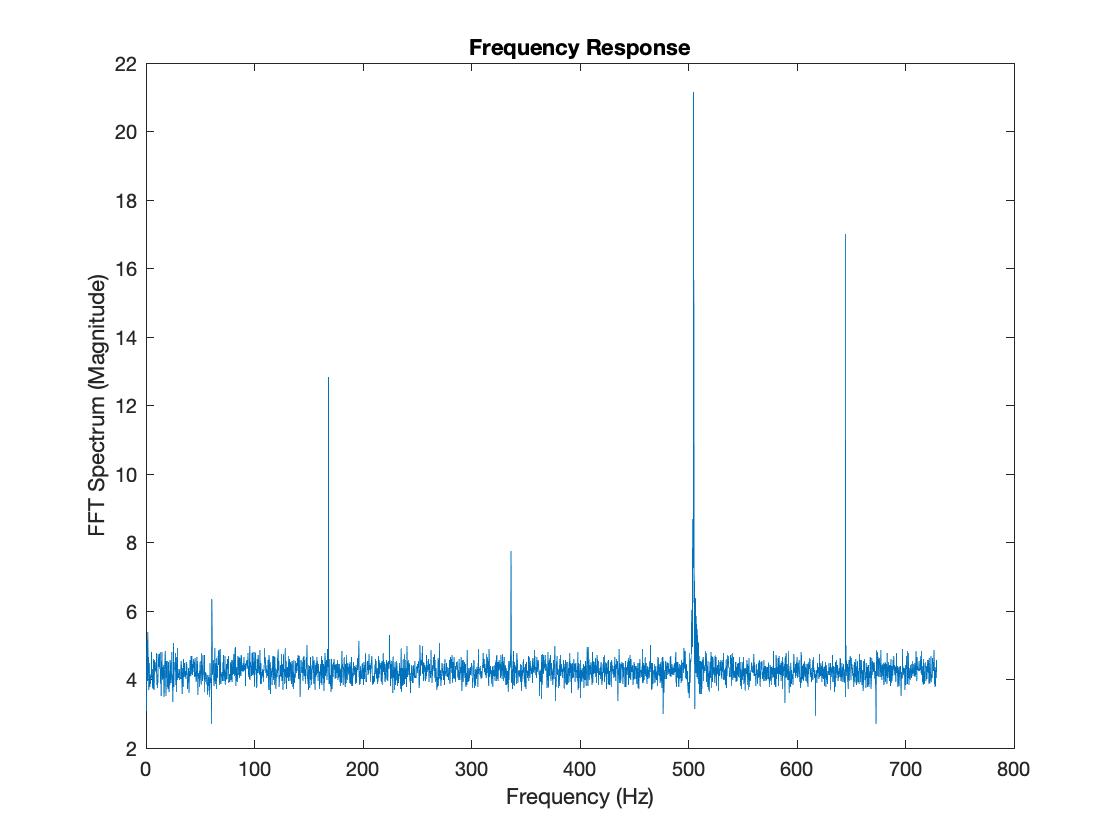

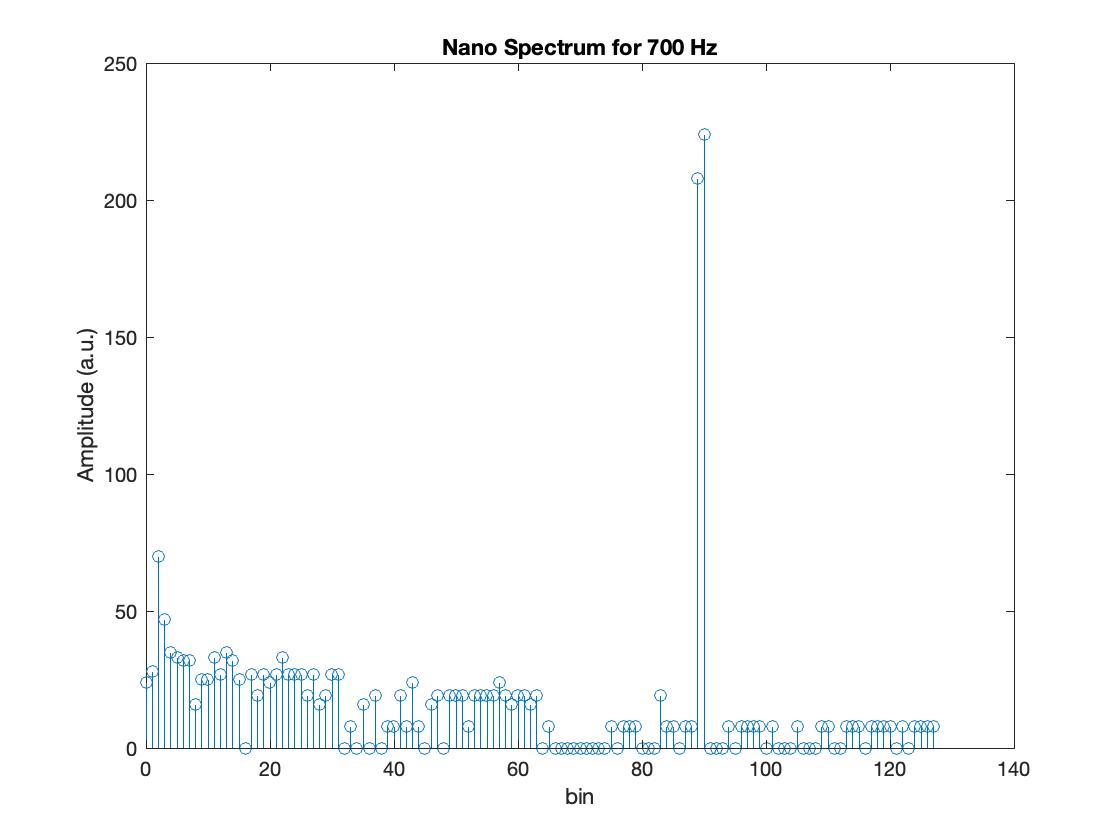

Picture 10: The spectrum obtained with the Nano for a sound frequency of 700 Hz

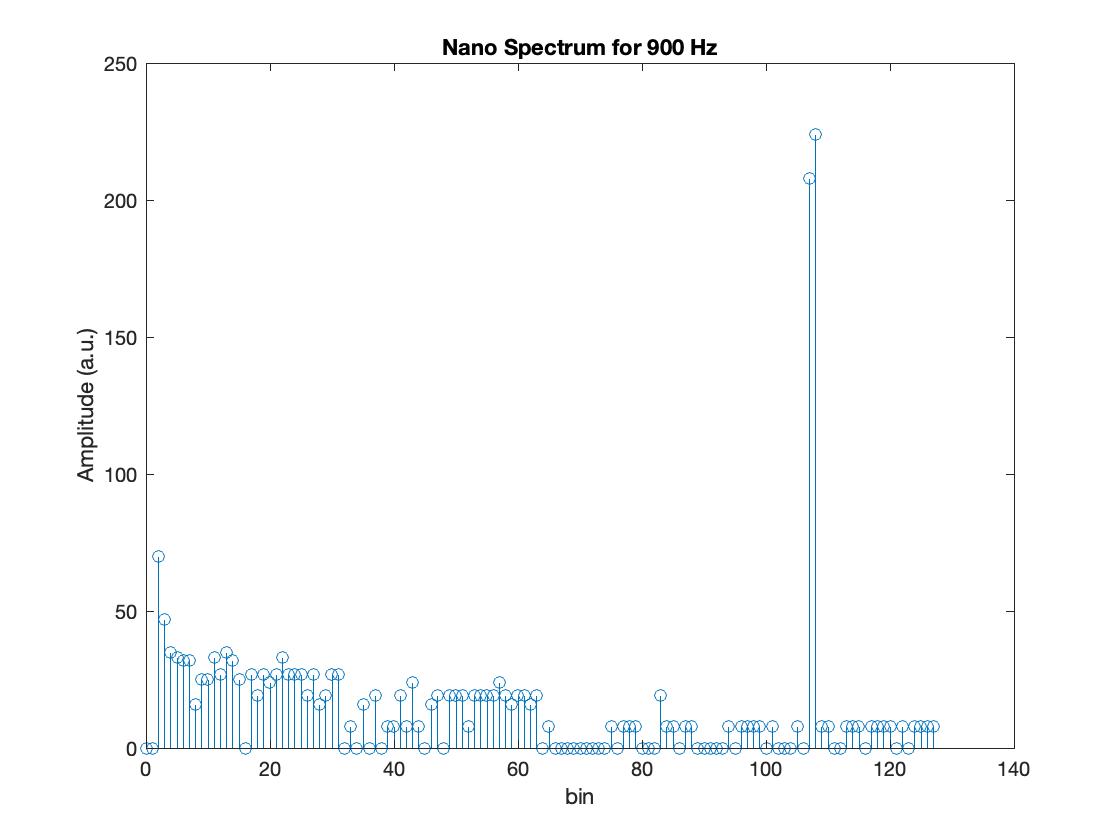

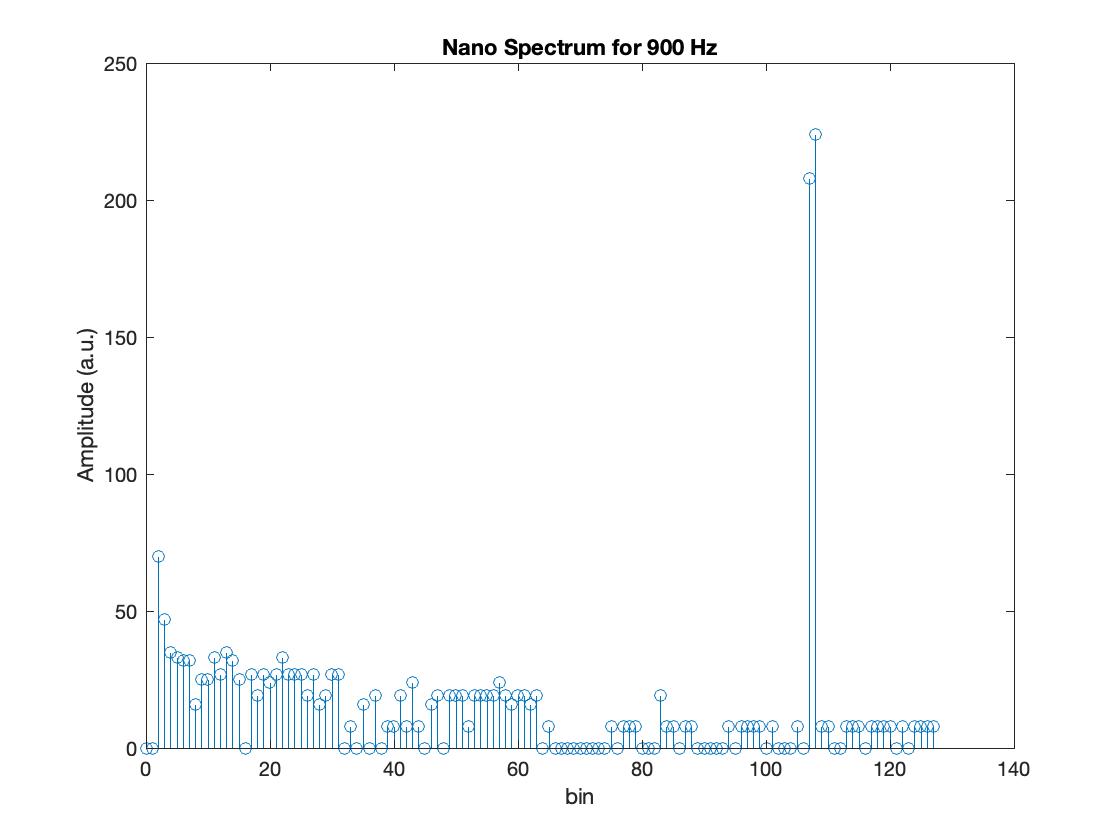

Picture 11: The spectrum obtained with the Nano for a sound frequency of 900 Hz
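When reading spectra like these, it helps to know which FFT bin a test tone should land in. The sketch below assumes an N-point FFT of samples taken at a fixed rate. The helper names are hypothetical, and the sampling rate and FFT length in the usage note are illustrative values, not the settings used on the Nano in this lab.

```c
#include <assert.h>
#include <stdint.h>

/* Adjacent FFT bins are spaced fs / N apart in frequency.
   Returns the index of the bin nearest to tone_hz (rounded). */
uint32_t fft_bin_for_tone(uint32_t tone_hz, uint32_t sample_rate_hz,
                          uint32_t fft_len)
{
    assert(sample_rate_hz > 0 && fft_len > 0);
    /* For real input, only bins up to fs/2 (Nyquist) carry unique information. */
    assert(tone_hz <= sample_rate_hz / 2);
    /* Adding half the divisor rounds to the nearest bin. */
    uint64_t num = (uint64_t)tone_hz * fft_len + sample_rate_hz / 2;
    return (uint32_t)(num / sample_rate_hz);
}

/* Centre frequency of a given bin in Hz, truncated to an integer. */
uint32_t fft_bin_center_hz(uint32_t bin, uint32_t sample_rate_hz,
                           uint32_t fft_len)
{
    assert(fft_len > 0);
    return (uint32_t)((uint64_t)bin * sample_rate_hz / fft_len);
}
```

For example, with a hypothetical 2560 Hz sampling rate and a 256-point FFT, the bins are 10 Hz apart, so tones at 500 Hz, 700 Hz, and 900 Hz would peak at bins 50, 70, and 90 respectively.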

Summary & Takeaways

In Lab 3, my biggest takeaway was that the seemingly more complicated way of doing things may be the most efficient and rewarding. Even though I had to redo this lab a few times to get it right, I'm very glad that I did!

Next Steps

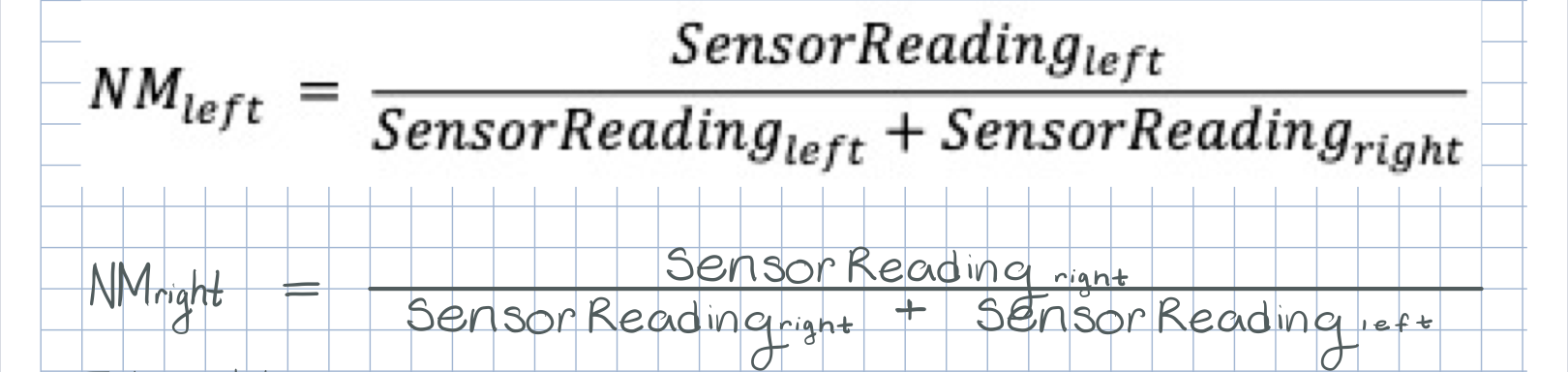

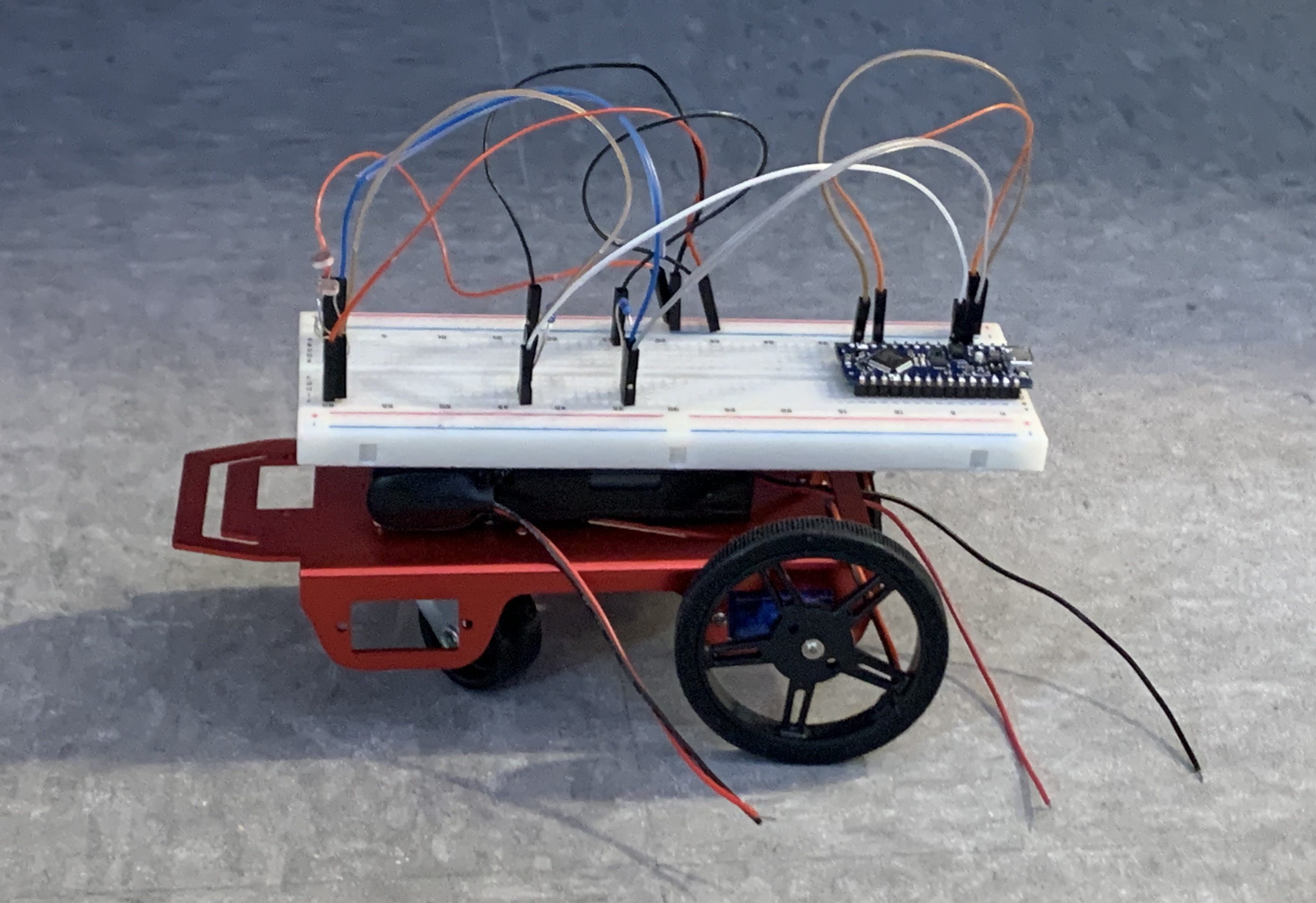

Now that I have completed this lab, my next step is to figure out how to use the bandpass filter in Lab 4.